12 Oct 2023. See also Sam’s The Lessons of Fin for This Generation of AI Assistants retro.

From 2015 to 2019, I worked at Fin, where we were building what I would describe as “Uber for executive assistants.” The idea was that an EA can be incredibly valuable, but typically you only get access to this service if you can hire someone full time (40h / wk).

We thought it would be a long time before “AI” could deliver such a service, so we hired a team of people, built them powerful tools for managing a knowledge graph with all the essential details you need about someone if you’re gonna be their assistant, and gave anyone access under a “pay per minute” pricing model.

Our team of assistants was incredibly efficient and the service was really delightful to use, but the business did not grow quickly enough to fulfill venture returns. The main problem was that even if you’re very efficient, it takes longer to do an even seemingly simple task – make a restaurant reservation or schedule a meeting for a few people – than you think.

Since the release of ChatGPT, several people have sent me questions about the Fin Assistant service (as they are planning to build a new and similar type of service on top of GPT-x).

This would be great and I hope someone makes it happen, because I miss the Fin Assistant!

I have started posting the questions and answers here – along with a full archive of the old fin.com blog – for anyone working on something like this to make use of.

If you have a question that is not answered here, submit it to this Google Form and if I know the answer I’ll add it to this page.

- FAQ

- fin slide archives

- Sam’s Retro

- fin blog archives

- Introducing Fin // 17 Aug 2015

- The Fin Exploration Company Charter // 10 Sep 2015

- On Bots, Conversation Apps, and Fin // 21 Jan 2016

- AI and AAI // 28 Jan 2016

- Everything I Have Learned about Making Engineering Performance Reviews Efficient, Effective, and Fun // 23 Oct 2017

- Building Hybrid Intelligence Systems - 2017 Fin Annual Letter // 20 Nov 2017

- 1. Shared memory tools make teams smarter and better than any individual can be alone.

- 2. Checklists help even the best humans get things right.

- 3. Using personal context to do the ‘right’ thing for each customer is table stakes for doing work as high quality as a human assistant would.

- 4. Leverage data to reduce variance in human systems.

- 5. Computers are better at math than humans.

- 6. Humans are the universal API.

- 7. Closing thoughts: hybrid intelligence systems should outperform pure software and isolated individual humans.

- Intelligent Work Routing, Dedicated Account Managers, and New Feedback Channels // 13 Feb 2018

- Improving Operations Quality through Measurement and Performance Management // 15 Apr 2018

- Finding the Right Person for the Job: Matching Requests of Different Types to Workers with Different Skills // 12 Jun 2018

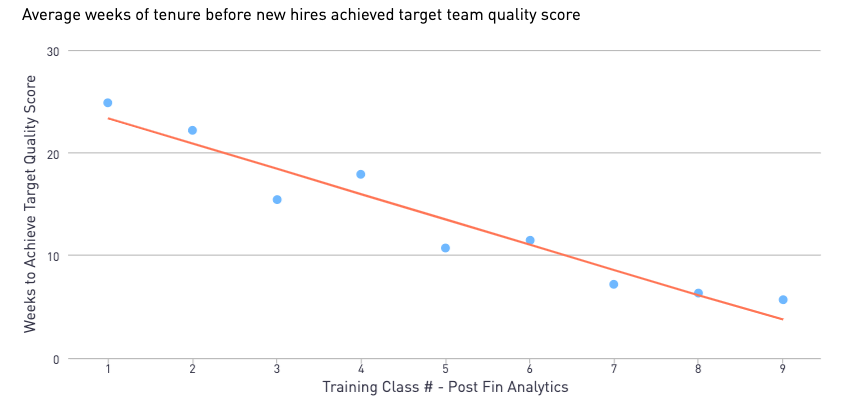

- Fin has gotten dramatically higher quality and lower cost over the past few months // 18 Jun 2018

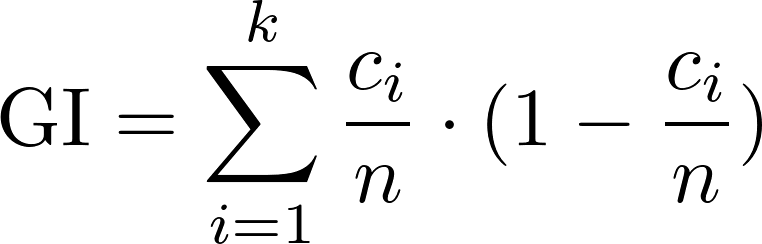

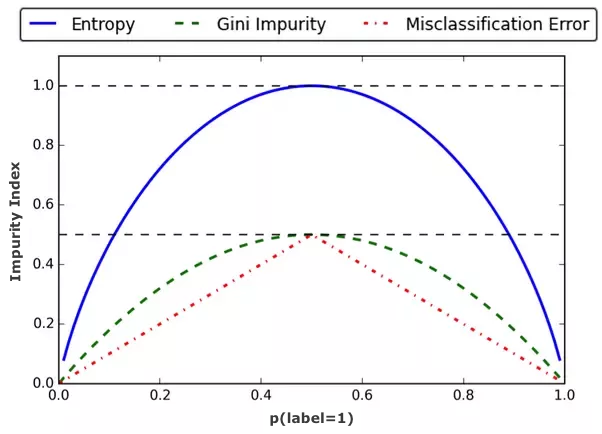

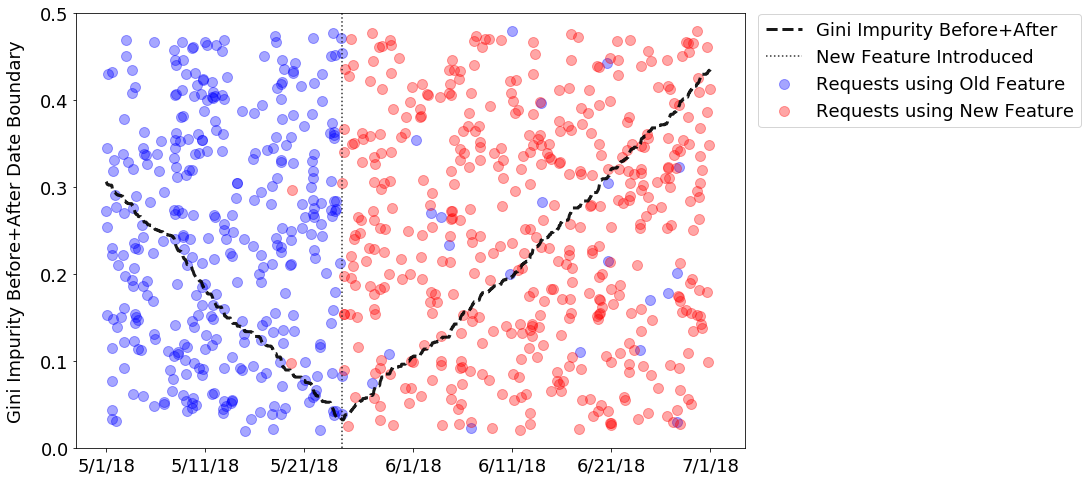

- Identifying Feature Launch Dates Using Gini Impurity // 07 Jul 2018

- 2018 Fin Annual Letter // 31 Oct 2018

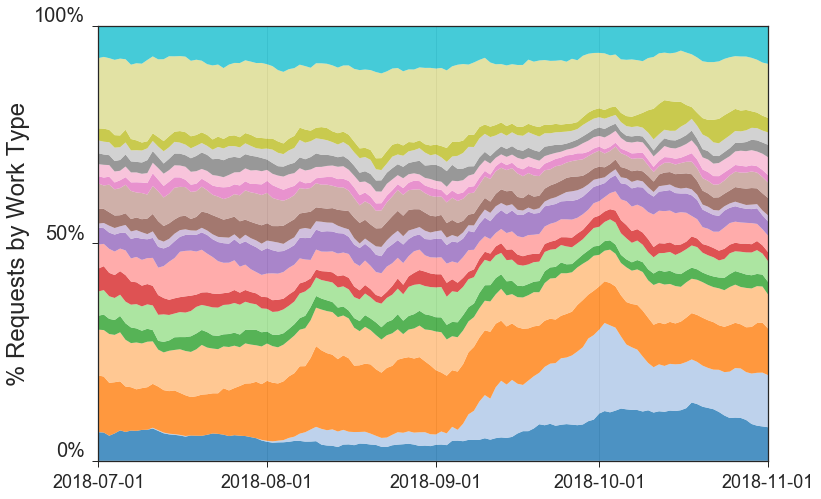

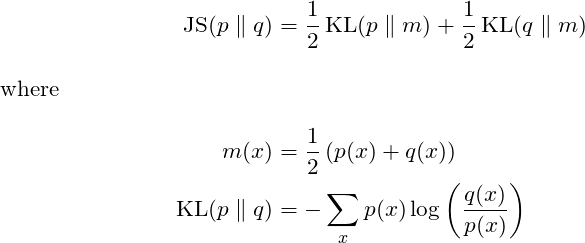

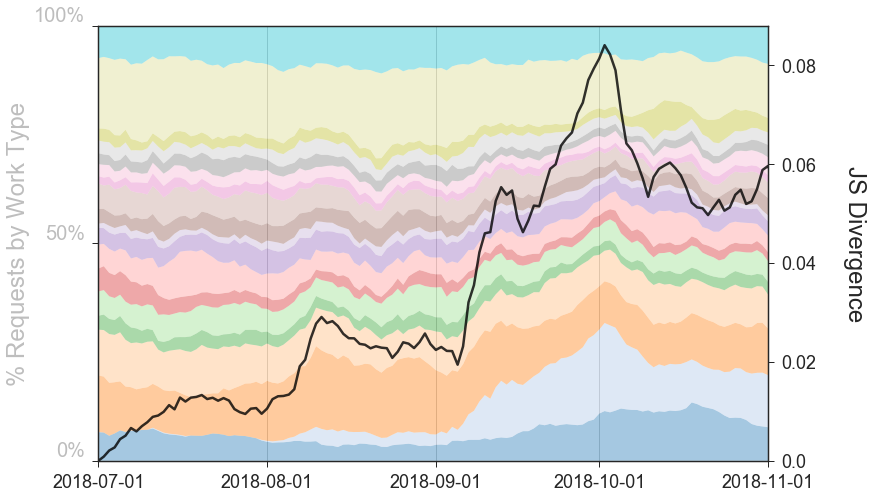

- Measuring Work-Mixture Changes using Jensen Shannon Divergence // 04 Nov 2018

- Fin’s Plan for 2019 // 18 Dec 2018

- Talking Fin Analytics with Harry Stebbings // 04 Feb 2019

- AAI 2018 Conference // 05 Feb 2019

- Why You Need to Invest in Analytics Before You Invest in RPA // 24 Feb 2019

- CX and Ops Teams Need Full Funnel Metrics // 18 Mar 2019

- Creating Metrics for Diverse Workstreams // 26 Mar 2019

- Driving Success Metrics with an Operations Flywheel // 16 Apr 2019

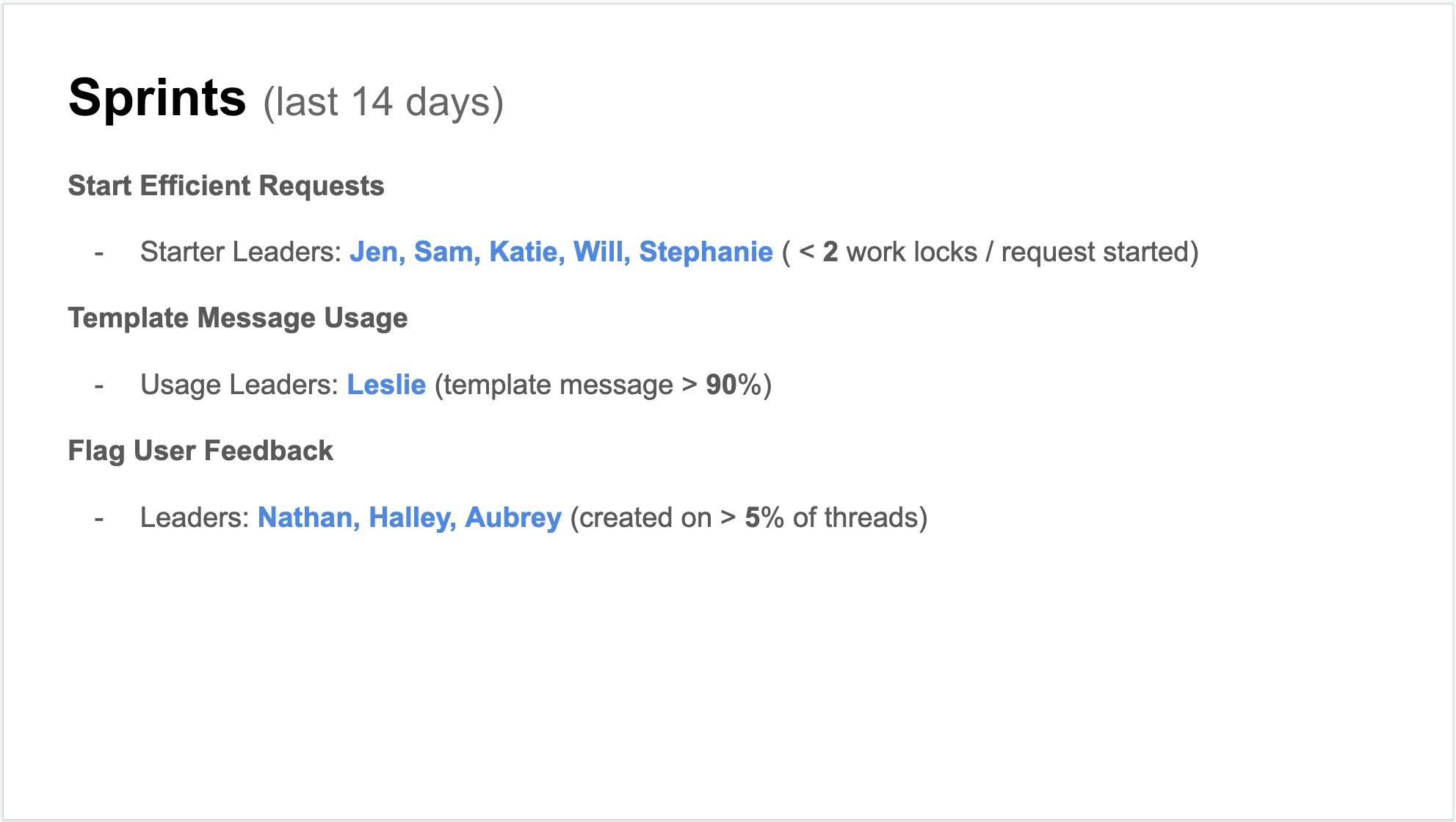

- Agent Metrics Overload: Scorecards, Focus Sprints, and All-Stars // 06 May 2019

- Get the Rest of Your Org on the ‘Front Lines’ with Your CX Agents // 13 May 2019

- Increase Team Productivity with Comprehensive Process Data // 23 Jul 2019

- How to Choose CX Metrics that Drive Real Business Outcomes // 25 Sep 2019

- How to Organize Operational Data for Maximal Insights // 03 Oct 2019

- Identify and Prioritize Opportunities for Workflow Improvements // 14 Oct 2019

- AAI 2019 Conference // 01 Nov 2019

- Opening Remarks: AAI Year In Review

- The State of Knowledge Labor

- The State of Operations Tools

- RPA and Automation

- Next Generation End-User Services

- The Startup Path to the Future vs. Enterprise Modernization

- Measurement and Optimization Strategies

- Fireside Chat: Amar Kendale & Jessica Lessin

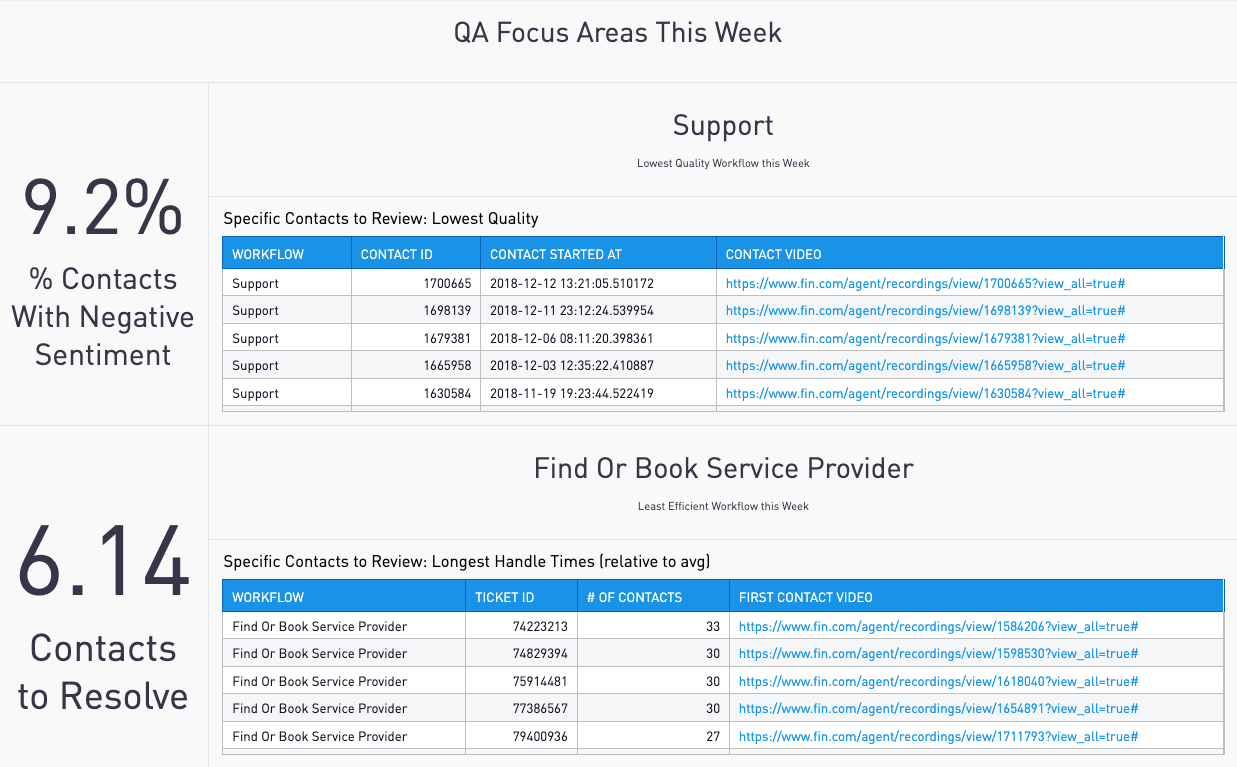

- QA strategies & debugging people, process, and tools in AAI systems

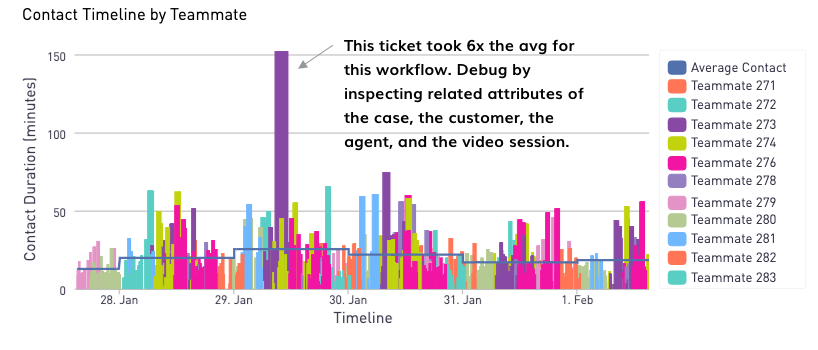

- Handling Ticket Outliers in Operational Settings // 06 Nov 2019

- Discovering Best Practices From Your Team // 12 Dec 2019

- Benchmarks and Approaches for Managing Team Engagement // 12 Dec 2019

- Can’t find an answer?

FAQ

Why did Fin shut down?

Although our team was very efficient, the service was still more expensive than most customers wanted. I imagine you could do something far cheaper and maybe slightly less capable using GPT-4 plugins these days and maybe find a market.

You would certainly get me as a customer if you built a great assistant today!

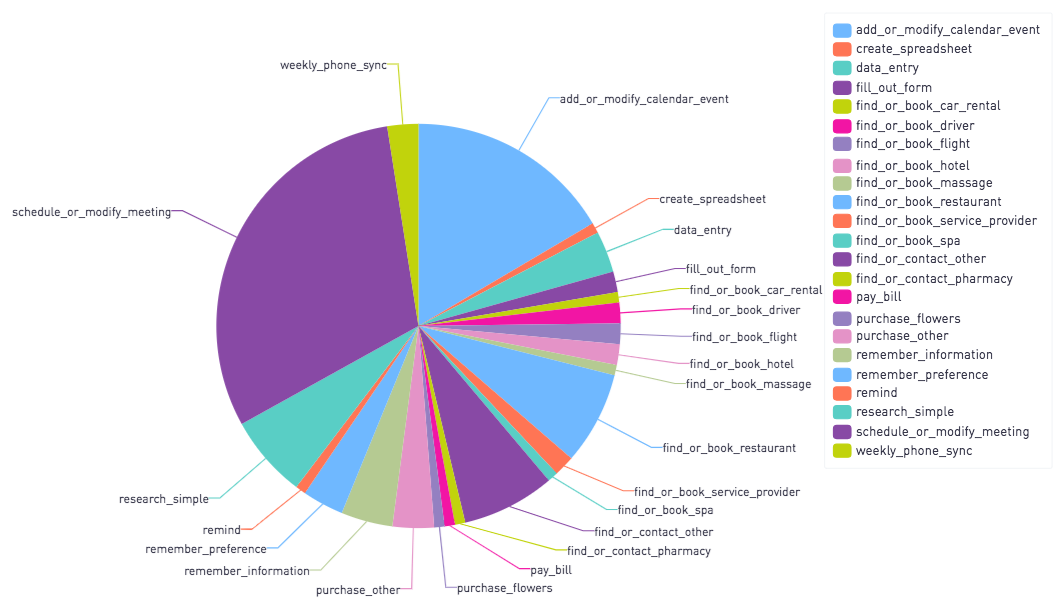

What kinds of things did people ask Fin to do?

I dug up this “Workflow Fingerprint” for a user that shows the major categories and subcategories of tasks we performed:

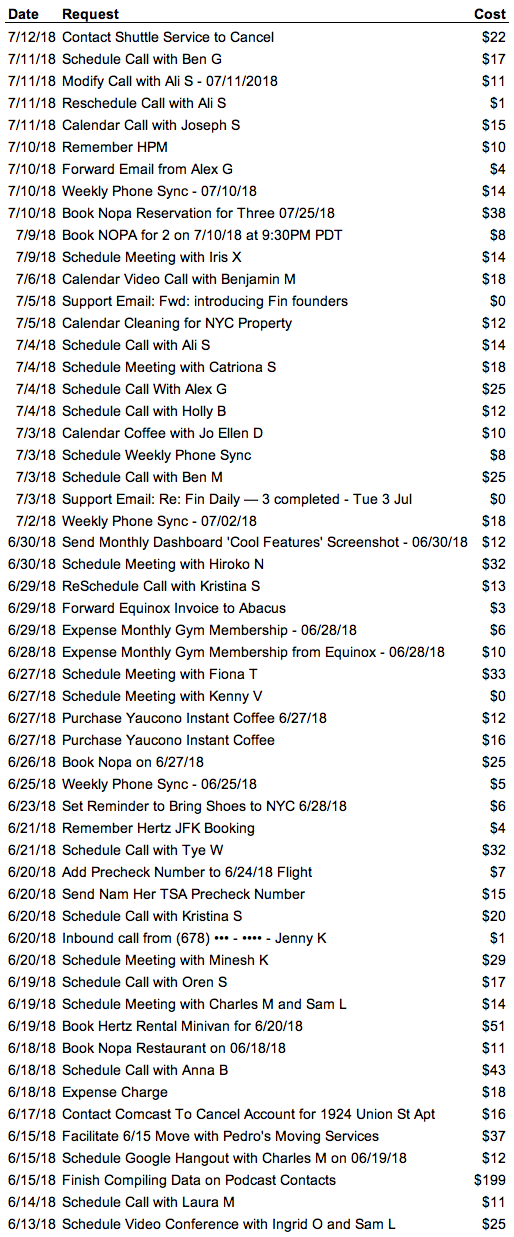

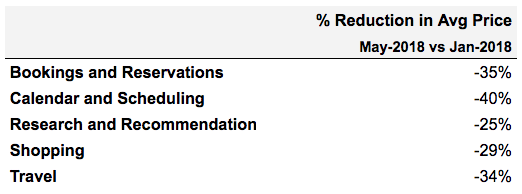

How much did tasks cost?

Here are some screenshots I found from 2018:

How were you able to get customers to share sensitive information with you?

The more you trust an assistant with sensitive information, the more they can do for you / the more time they can save you, e.g.:

- they need read / write access to manage your calendar

- they need your credit card number to buy things for you

- they need your home address to have things shipped to you

- they may need a photo of your driver’s license or passport to book cars or trips for you

- etc, etc, etc

Very few people started by granting full access. They would typically start out with tasks requiring less sensitive information, eventually ask for something that required more sensitive information, and at this point if the task was valuable enough to them and they trusted us they would provide the necessary sensitive info.

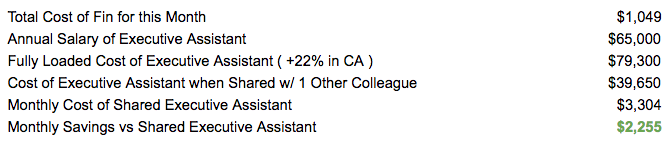

What kind of tools did you build?

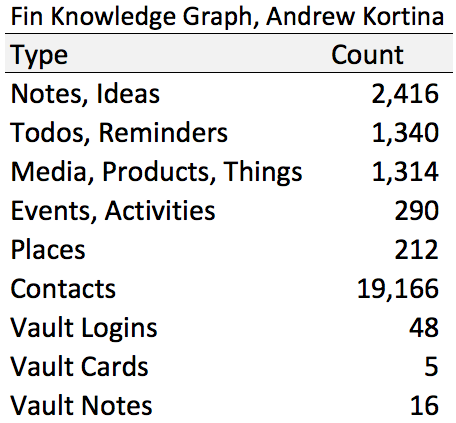

One of the main challenges in trying to enable a team of people to operate AS IF THEY SHARE A BRAIN is context maintenance.

For example, when you tell your human assistant, “I always try to schedule a 15 minute buffer between calls but a 30 minute buffer between in person meetings – and obviously account for travel time…”

This is relatively easy for one person to track, much harder to store in a structured way and present ONLY WHEN NECESSARY – because any given person eventually has hundreds of nuanced preferences, and you cannot expect an assistant servicing a request for them for the first time to read through every single one!
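To make the “present only when necessary” idea concrete, here is a minimal sketch, not Fin’s actual implementation. It assumes each stored preference is tagged with the task types it applies to; the `Preference` class, the tag names, and `relevant_preferences` are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preference:
    """One stored customer preference (hypothetical structure)."""
    text: str
    task_types: frozenset  # task types this preference applies to


def relevant_preferences(preferences, task_type):
    """Return only the preferences tagged for the given task type."""
    return [p for p in preferences if task_type in p.task_types]


# Example data: tags like "scheduling" and "purchase" are assumptions.
prefs = [
    Preference("15 minute buffer between calls", frozenset({"scheduling"})),
    Preference("30 minute buffer between in-person meetings", frozenset({"scheduling"})),
    Preference("Ship packages to the home address", frozenset({"purchase"})),
]

# An agent handling a scheduling request sees two preferences, not all three.
for pref in relevant_preferences(prefs, "scheduling"):
    print(pref.text)
```

Tagging by task type is only one possible filter. A real system would likely need finer-grained matching, but the point is the same: the agent sees a short, relevant list instead of hundreds of notes.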

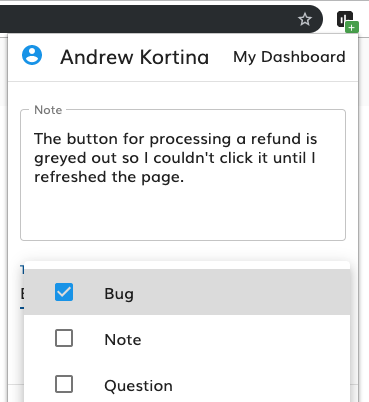

Here are some screenshots of dashboards we built and workflows to create structured data and then output it into templates for communicating with customers.

Notes on this one:

- the “Typical Price Range: $10 - $32” was computed using all the attributes of this request and a machine learning model we called “price book,” and was shown to the customer to help them anticipate the cost of a task

- addresses, geo coordinates, calendar events, payment methods – all of this was structured and saved in the graph (and if sensitive, stored in a “Vault” – whenever an agent accessed an item in the Vault, it was audit logged so customers could review access to sensitive data)

- all these form fields had type-ahead auto-completions

- the red text is product feedback for the team of agents managing workflows

- you can see, a lot of the structured data is geared towards helping human agents transmit context to one another
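The audit-logged “Vault” mentioned in the notes above can be sketched roughly as follows. This is an illustration under assumptions, not the real Fin system: the `Vault` class, its method names, and the log entry fields are hypothetical, and a real vault would also encrypt the stored values.

```python
from datetime import datetime, timezone


class Vault:
    """Toy in-memory store where every successful read is audit logged."""

    def __init__(self):
        self._items = {}
        self.audit_log = []  # entries a customer could later review

    def put(self, key, value):
        self._items[key] = value

    def read(self, key, agent_id):
        value = self._items[key]  # raises KeyError if the item does not exist
        # Record who accessed which item, and when.
        self.audit_log.append(
            {"agent_id": agent_id, "key": key, "at": datetime.now(timezone.utc)}
        )
        return value


vault = Vault()
vault.put("credit_card", "4111-XXXX")
vault.read("credit_card", agent_id="agent-7")
print(len(vault.audit_log), vault.audit_log[0]["agent_id"])
```

Routing every read through one method is what makes the log trustworthy: there is no other path to the sensitive value.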

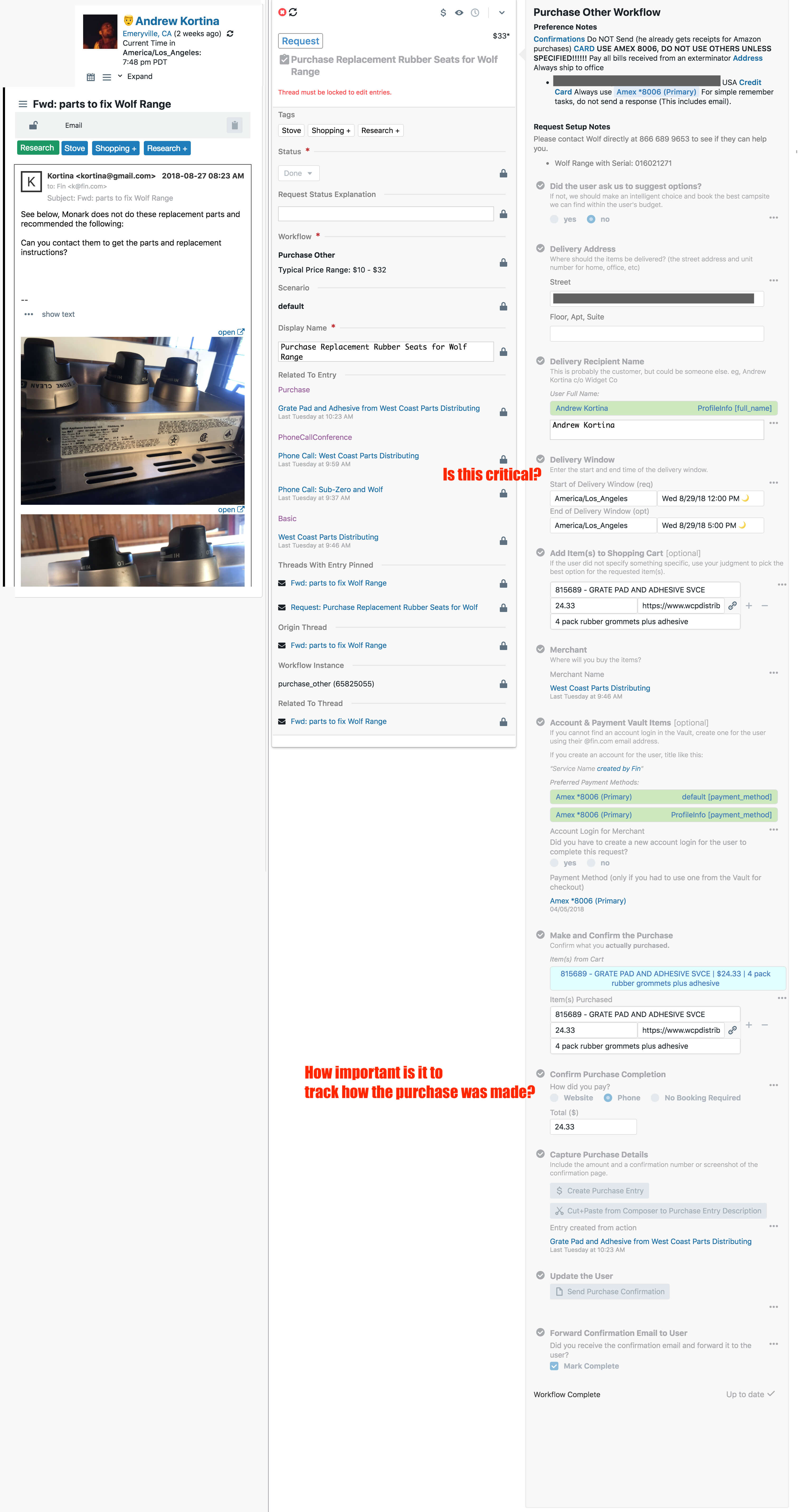

Here is the workflow for scheduling or modifying a meeting (lumped into a single workflow because they are almost identical tasks).

Note there were deep integrations into the calendar to automate the placing and removing of “HOLD” events on the user calendar to prevent double bookings:
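As a rough illustration of the “HOLD” idea (a sketch with an in-memory list standing in for a real calendar API, and with hypothetical names `Event`, `place_holds`, and `confirm`), the logic might look like this:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    title: str
    start: datetime
    end: datetime


def overlaps(a_start, a_end, b_start, b_end):
    """True if the two half-open time ranges intersect."""
    return a_start < b_end and b_start < a_end


def place_holds(calendar, candidate_slots):
    """Add a HOLD event for each candidate slot that is free; return the holds placed."""
    holds = []
    for start, end in candidate_slots:
        if any(overlaps(start, end, e.start, e.end) for e in calendar):
            continue  # slot already taken, so do not offer it
        hold = Event("HOLD", start, end)
        calendar.append(hold)
        holds.append(hold)
    return holds


def confirm(calendar, holds, chosen, title):
    """Remove all holds and book the real meeting in the chosen slot."""
    for hold in holds:
        calendar.remove(hold)
    calendar.append(Event(title, chosen.start, chosen.end))


calendar = [Event("Lunch", datetime(2018, 6, 1, 12), datetime(2018, 6, 1, 13))]
holds = place_holds(calendar, [
    (datetime(2018, 6, 1, 12, 30), datetime(2018, 6, 1, 13, 30)),  # clashes with Lunch
    (datetime(2018, 6, 1, 14), datetime(2018, 6, 1, 15)),
    (datetime(2018, 6, 1, 16), datetime(2018, 6, 1, 17)),
])
confirm(calendar, holds, holds[0], "Call with Alex")
print([e.title for e in calendar])
```

While the other party is choosing among the proposed times, the holds keep anything else from landing in those slots; once one time is confirmed, the remaining holds are cleaned up.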

Another workflow to find or book a driver:

Here is an example of the tool our best agents used to build “workflows” – forms that encapsulated the best practices for each type of task and were able to output structured data into templates:
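The general pattern of a form that collects structured fields and renders them into a customer-facing template can be sketched like this. The field names, the template text, and `render_confirmation` are made up for illustration and are not taken from Fin’s tooling.

```python
from string import Template

# Hypothetical template for one task type (restaurant reservations).
CONFIRMATION = Template(
    "Hi $customer, your $meal reservation at $restaurant is confirmed for $time."
)

# Fields the form must collect before a message can be sent.
REQUIRED_FIELDS = ("customer", "meal", "restaurant", "time")


def render_confirmation(form):
    """Validate the structured form data, then fill in the template."""
    missing = [name for name in REQUIRED_FIELDS if not form.get(name)]
    if missing:
        raise ValueError("missing fields: " + ", ".join(missing))
    return CONFIRMATION.substitute(form)


form = {"customer": "Ana", "meal": "dinner", "restaurant": "Zuni", "time": "7pm"}
print(render_confirmation(form))
```

Requiring the fields up front is what lets a form encode best practices: an agent cannot send the message until the details that matter for this task type have been captured as structured data.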

fin slide archives

Here are a few slide shows I dug up:

- Fin World Tour 2017: A System and Method for Performing Heterogeneous Knowledge Work

- Fin Analytics

- The Operational Flywheel: The Science of Continuous Improvement for CX Metrics

Sam’s Retro

fin blog archives

Introducing Fin // 17 Aug 2015

A few months ago, the two of us started exploring an idea: “Mobile devices are extremely powerful, but apps are a crude method of interaction. In sci fi, people just talk to machines like they talk to people. That is the future.”

We don’t want to wait for the future. We want something like the OS from Her today. To this end, we’ve started The Fin Exploration Company, with a small team of wonderful people.

When we started, our goal was to remove friction and tedious tasks from our lives. But what has emerged from our prototype is more than this.

After interacting with Fin for a few weeks, I’ve been surprised to find that it does not feel like software. Fin feels like a person — actually, it feels like a multiplicity of people, almost like a city…

Fin grows and learns. Fin has an opinion. Fin surprises me and challenges me. Talking to Fin is a real conversation, not just a query and response or command dispatch.

If developing this kind of intelligent entity excites you, email us. We are hiring engineers and are always looking for new teammates who share our grit, curiosity, and voracious appetite for life.

– Sam and Kortina

The Fin Exploration Company Charter // 10 Sep 2015

We believe that people will someday interact seamlessly with the universe of information and services through natural speech assisted by an “agent” that knows everything about us. The final interface is no interface. This is not a novel vision; it is one that has persisted through generations of science fiction. Many organizations are currently working on the deep problems that should eventually - given enough time and effort - deliver this future.

We don’t want to wait.

Fin (The Fin Exploration Company) has the charter to sail due west from port San Francisco with a small veteran crew seeking a shorter route to the end game of technology. Our mission is to determine if we can build a valuable and useful service in 2015 that models and can eventually develop into the “end”.

We will get to market this year by releasing three key constraints:

- First, in due time, we believe that this service will be primarily delivered by AI. But to make this possible today, we believe in primarily leveraging the most powerful intelligence currently known. People.

- Second, following from that, we believe that this service will eventually be available to everyone at zero cost. But to make it possible today we believe in starting with an expensive subscription product that will initially only be available to the few, not the many.

- Third, in due time we believe that this service will be able to efficiently interface with the marketplace of all the other services in the world, to provide for people on-demand and seamless real world goods, products and services; however, the first step in the journey will be fully focused on determining if a stand-alone information service will provide enough value to justify the expense of providing the service today.

We intend to be rigid and dogmatic on two key points:

- We will not divert course from a path that we believe leads to the end game. As we sail we will likely make discoveries that will speed our journey, which we will embrace wholeheartedly. What we will not do is compromise on the mission or divert course for safe harbors we may find along the way. We may evolve our charted course, but we do not believe in pivots. This means we will always bias towards how we expect AI will work at scale, and bias away from application specific user interface.

- We do not believe in negative gross margins or inflection points. Over time technology can improve the quality of service and margins, but there are no magic corners in great services or businesses where zeros become ones. Therefore, as we sail we will not subsidize the actual cost of service to users. We believe that without the rigor of gross margin, it is too easy to lose your way.

We believe in a specific form of navigation:

The most important part of any expedition is how you choose to navigate and therefore measure your progress. Our core belief is that any product that does not have consistent daily use is destined to ultimately fail. Thus, we intend to optimize in this first expedition for daily users and daily use, measured in terms of writes per paid subscriber.

For us to consider this exploration to be successful we need a small set of people to be paid subscribers who use the product at least 5 days a week, with a positive curve on the number of uses per day. To be sure, the key is to achieve these metrics on our specific product and vision, not just any product.

If we can achieve that end, we will be confident that we are on the right course. If not then we will be skeptical. Because we will not broadly launch, we expect to be the first two daily users and expect the remainder of the set to be in New York and San Francisco and likely be our friends and friends-of-friends. We are not looking for broad user adoption or appeal; we are looking for real and measurable use from a small set of people, not just intellectual lip-service.

That said, while we have a clear sense of where we want to go and what metrics to watch, we will be leading day-to-day by feel and our own sense of dead reckoning based on our collective product experience. We will be observing how people behave/act as opposed to overly reacting to opinions people verbalize.
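For readers who want to track the same navigation metrics described above (paid subscribers active at least 5 days a week, and writes per paid subscriber), here is one way they could be computed. This is a sketch only: the input shape and the name `weekly_usage` are assumptions, not something from the charter.

```python
from collections import defaultdict
from datetime import date


def weekly_usage(writes, paid_subscribers):
    """Compute the two metrics for a single week.

    writes: iterable of (user_id, date) pairs, one per write, all within one week.
    paid_subscribers: set of user ids with a paid subscription.
    Returns (set of subscribers active on 5+ distinct days, writes per paid subscriber).
    """
    active_days = defaultdict(set)
    write_counts = defaultdict(int)
    for user_id, day in writes:
        if user_id in paid_subscribers:  # ignore unpaid users
            active_days[user_id].add(day)
            write_counts[user_id] += 1
    daily_users = {u for u, days in active_days.items() if len(days) >= 5}
    if not paid_subscribers:
        return daily_users, 0.0
    return daily_users, sum(write_counts.values()) / len(paid_subscribers)


# "a" writes once a day for five days, "b" writes three times on one day,
# and "c" is not a paid subscriber.
writes = (
    [("a", date(2015, 9, d)) for d in range(7, 12)]
    + [("b", date(2015, 9, 7))] * 3
    + [("c", date(2015, 9, 7))]
)
daily_users, writes_per_subscriber = weekly_usage(writes, {"a", "b"})
print(daily_users, writes_per_subscriber)
```

Counting distinct days rather than total writes matters here: a subscriber who sends many requests on one day is not the consistent daily user the charter is looking for.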

Columbus spent years arguing for and training for his first mission to try to find a shorter route to Asia. He sailed without seeing land for just 29 days. The actual expedition that found the new world was just 29 days long. This is the model we intend to follow. A carefully planned sprint, not a marathon.

In this respect we expect that this initial charter will be quite short-lived. If we sail for a few months and find something very special, then we will evolve into a development company rather than an exploration company and devote decades to building a product that will hopefully eventually deliver the future to millions… But what we are staffing and financing today is a crazy, very high risk, expensive expedition, not the hard work of development.

We are confident in our vision and strategy, but we sincerely acknowledge that we will likely fail, and want to impress on all those that embark on this journey with us a simultaneous sense of confidence and expectation of complete failure. We can make strong arguments to ourselves that the time has come for this expedition, but we also are fully aware that those that have come before us and failed held similar perspectives. If we are unable to find what we are looking for, we will regroup, put together another expedition, and sail again.

– Sam

On Bots, Conversation Apps, and Fin // 21 Jan 2016

2016 is being declared the year of bots. And it feels like there is a broad shift in the developer ecosystem away from traditional point-and-click apps, towards chat-based user interfaces.

It’s happening because there is broad consumer and developer fatigue with apps. Consumers don’t want to install or use new traditional apps. And partially as a result, developers are faced with rising distribution costs.

At the same time, the American platform companies are preparing for a new post-app battleground modeled after the Asian messaging services. Companies like Slack are looking at chat-as-platform as a major next step. Facebook is banking on its messaging properties (Messenger and WhatsApp) to get back into the app platform game.

The many-billion-dollar question, however, is whether the transition to bots and conversational interfaces will represent a major point of disruption or more of an evolution in the interface paradigm.

In the last few decades we have seen several moments of big platform turnover. There was the move from shrink-wrapped software on desktops dominated by Microsoft to the free-for-all of the Web. Then there was the move from the Web to the app world dominated by Apple and Google. And now we are at the start of the move from apps to bots and conversations.

The bet I am making, both as an investor and operator, is that the 2016 bot paradigm shift is going to be far more disruptive and interesting than the last decade’s move from Web to mobile apps, though perhaps not as important as the move from shrink wrap to Web.

The Last Big Shift: 2008

In 2008 Apple opened up the app store and began to usher in the move away from the Web and back towards client software in the form of downloadable apps.

Developers had to deal with new languages and new approval processes, and had to remember how to handle all the cost and complexity of developing and maintaining client software.

But it was clearly worth the move.

Phones expanded the market for developers by increasing the reachable population of people online and expanding the time each person was spending connected. At the same time, new payment options made it possible for developers to monetize better than they could before.

There was a gold rush. Categories like casual games, which could monetize the new platform quickly and efficiently, grew rapidly. The disruption also allowed several companies, like Instagram and Snapchat, to wedge into valuable positions against Web incumbents.

That rush, however, was relatively short-lived, and many if not most of the high-flying app-first companies have been wiped out. Today, the list of dominant players doesn’t look all that different from the way it looked pre-app. Facebook, Google, and Amazon dominate their respective verticals — communication, search, and commerce — just as they had at lower-total-scale pre-app.

Over time the legacy of the app rush is likely to be enabling on-demand services like Uber and Postmates by making it easy to manage a distributed geo-located workforce, more than fundamentally changing the dynamics around how people spend their time and money.

The app era certainly enabled some new awesome services. But when you step back for a moment it really didn’t change the fundamental landscape of communication, search, entertainment, or commerce very much. It just increased the reach and depth of the incumbents.

The Next Big Shift: 2016

If the app shift moved developers away from server side development and towards clients, the most important part of the current shift is a move back towards the server and away from client software in the form of bots.

Practically this means that services are being developed in one of two ways. The first is with no client-side software, using things like text-messaging today, and perhaps Facebook and Slack tomorrow, as the user-facing front end. Or, short of that, with very lightweight and simple apps connected to much deeper server-side services.

There are several advantages to this shift. First, and I don’t say this lightly, dealing with installed client software is slow. You have multiple versions of the same software running on different devices, and you have to ship software that cannot be easily recalled for bugs or errors. Startups have a hard time winning at the game (as I have written about before). The bot paradigm is going to allow developers to move fast again.

Second, while phones have certainly gotten more powerful and have more storage than ever before, they’re toys compared to what you can do server side with massive data and processing power. This is a big part of the reason that so many so-called AI companies are springing up. They will be the arms dealers to bot developers.

Third, services with bots on the front end can, in theory, be far more personal than apps. Apps that are downloaded and installed are broadly the same for all users. Sure, the content inside the app might be personal, but the layout, functionality, and context of remotely installed software is very hard to customize for each user. Bots are different. In theory they are infinitely more personalizable because you aren’t moving interface around, you are customizing content and service.

At the same time, it isn’t all gravy for developers in bot-land.

First, the fact that bots aren’t installed so much as messaged is a double-edged sword for developers.

Taking away the installation step makes it much easier to quickly start using a bot versus the heavy install process on apps. This will reduce the friction in getting users to try new things.

The negative part is that with apps, if a developer makes it onto a user’s home screen, they can develop a direct relationship with the user. Bots have a much harder time getting to that point to the extent they sit on top of messengers and can’t occupy as prominent screen real estate.

Second, the platform business dynamics around bots are yet to be determined. The specific rules that get set for bots will matter a great deal in defining whether they become a vibrant ecosystem or a failed backwater.

There are two possible extremes to this. On one hand, companies like Slack and Facebook could decide to play kingmaker in various verticals, just as their Asian messaging counterparts have done. Doing this could drive more profit directly to the platforms and might actually provide better, more deeply-integrated services. But it would also kneecap a lot of innovation.

The other extreme would be a bot-free-for-all, which would lead to a lot of innovation, but also probably a lot of user issues around quality of service and privacy.

The likely outcome is almost certainly somewhere in the middle. The platform companies have too much history and experience to make the mistakes of either extreme. But the reliance of developers on single platforms and the ability of those platforms to change the rules and distribution characteristics for apps will leave developers on edge for the foreseeable future.

Fin.

There are people that like to talk about the bot space, or the “conversational app” space. I think this is ridiculous. Bots or conversational apps is not a “space.” It is a broad paradigm which describes a direction that developers are moving in.

There are conversational apps that are focused on shopping. They will compete with Amazon and other commerce platforms that will, in time, move towards the conversational space when it becomes important to their business.

There are conversational apps that are focused on travel, helping you figure out where to go, how to get there, where to stay and what to do. They will compete with Travelocity and Tripadvisor, and even Airbnb, which will build their own conversation interfaces too.

There will also be conversational apps focused on entertainment, news, and basically every other vertical that currently exists in the app store.

I’m part of a small group working on a conversational agent. It is called Fin, and it is in the search and information space.

We use a combination of machine and human intelligence to find answers, send messages and remember everything for you.

You use it like Siri, Echo, or Google Now. But because we use people, Fin gives you an answer every time rather than occasionally, and can handle natural human requests that go beyond simple facts.

Are we competing for the entire conversational market? Of course not. We do, however, think that we can make some core experiences you have every day dramatically better.

Conclusion

I hate buying into hype. I would love to say that all of the chatter and attention around conversational experiences and bots is just PR crap.

I don’t think it is. I think it is a fundamental shift that is going to change the types of applications that get developed and the style of service development in the valley, again.

In general, I am very excited. In the optimistic case I think that bots will open up better services for users faster and disrupt some very large entrenched players in a way that the app paradigm never did.

What remains to be seen is not who wins the conversational app space, which is an irrelevantly broad concept but, rather, which big incumbents seamlessly navigate this next shift, and which get taken out by new nimbler developers working in a new way, with new tools.

– Sam

AI and AAI // 28 Jan 2016

The paradigm shift towards conversational / bot interfaces in response to consumer app fatigue has been accompanied by a lot of buzz about “AI.”

All of this buzz (somewhat disappointingly) is just a conflation of the chat interface with “AI” due to the central role that conversation plays in the Turing test for machine intelligence — it’s not the result of any breakthroughs in general intelligence / deep learning that would enable new consumer applications. The exciting “AI” action is still in the realm of research labs like Vicarious or Google’s Inceptionism (perhaps an early version of “computer thought”?).

If we already had AI in the strong sense, Siri (and similar products) would be good. Today, however, Siri et al can really only perform narrow tasks like setting reminders, checking the weather, and dialing phone numbers (and they only get it right about half of the time).

The result is a tantalizing glimpse of the AI-powered interface from all the sci-fi (Samantha from Her, HAL from 2001: A Space Odyssey, Jarvis from Iron Man) that makes us yearn for this awesome style of interaction even more.

At Fin, we’re tired of waiting for “AI” / software to deliver on the future, so we’re trying to build it with “AAI” — artificial artificial intelligence. We will combine the best of both software and human intelligence to get the results we want — namely, a system that functions like the AI powered ones from sci-fi.

We’re less concerned with modeling pure software algorithms after individual brains, and we look instead to biological models at the species or ecosystem level, where we observe diverse sets of creatures participating symbiotically in a system that exhibits probabilistic tendencies.

With this in mind, we try to design all of the hybrid systems powering Fin to have feedback loops that ensure subsequent interactions tend to be better and better over time (just as we observe systems like evolution of species and survival of the fittest within species trend towards this in nature). This means that when we talk about “AI” at Fin, we don’t mean “artificial intelligence,” but “always improving.”

The practical upshot of our bastardized interpretation of “AI” is a service that delivers human level intelligence and understanding to our users today and over time is constantly learning more about them and getting faster and cheaper as our software systems grow more sophisticated.

If you’re interested in this framework for designing intelligent systems, drop us a line.

– Sam and Kortina

Everything I Have Learned about Making Engineering Performance Reviews Efficient, Effective, and Fun // 23 Oct 2017

When I ran our first performance review cycle for about a dozen engineers a little over a year ago, I had never participated in an official performance review myself, either as a manager or a report.

I was a bit skeptical that the whole thing might be a bunch of corporate bureaucracy, only necessary at large companies for incentivizing armies of employees with invented ladders of meaning.

I wondered why all feedback could not just be in realtime, ad hoc, based on performance wins and successes as they happen.

I was also a bit daunted by having to write reviews for over a dozen people.

Having run several of these now, however, I have come to really value and enjoy our review cycles, and I wanted to write up everything I’ve learned about the process along the way.

TOC (since this is a very long post):

- Getting Started

- The Basic Components

- Upward Review

- Self Review

- Peer Reviews

- Manager Review / Compilation

- Grouping and Summarizing Direct Quotes from Peers

- Commentary on Key Themes and Strategies for Future Growth

- Review Summary and Compensation

- Delivering Reviews

- Final Thoughts

Getting Started

When we spun up our first review cycle, having never done one before, I did what I usually do when I’m in a role doing something new: I interviewed some people who had done this before.

The two people I interviewed were Raylene Yung, an engineering lead @ Stripe (formerly @ Facebook, and also an advisor to Fin), and my co-founder, Sam, who had lots of experience with performance review cycles when he led a large product org at Facebook.

Btw, if you’re an engineering manager, I highly recommend checking out Step 4: Edit and iterate on your outline of Raylene’s post on how she runs performance review cycles. There are some great tips in there for making the review more focused.

Input from Raylene and Sam led to most of the shape of our current engineering review process at Fin.

The Basic Components

Our review cycle for each employee consists of the following:

- a review of your manager

- a self review

- 3 peer reviews

- a review from your manager

We use Google Forms to collect all of the reviews.

#protip Google Forms doesn’t reliably save your progress, so better to write your reviews in a doc and paste the answers into a form, rather than write a long review in Google Forms directly and risk losing your work.

Upward Review

The manager review consists of the following questions:

What did this person do well?

What could this person be doing better?

These are the most open ended of all our review prompts, leaving room for people to give feedback of any kind.

Here’s an example of some good upwards feedback:

Sometimes I wish I understood the process by which certain decisions were reached. In all-hands meetings, I’ve always walked away impressed by how clear and thoroughly reasoned objectives and goals are (i.e., I know why we have that objective and how we chose it vs. others). I don’t always feel this way in more micro-objectives or sometimes I feel that we choose to just “do it all” instead of picking specific things to focus on.

Self Review

The self review consists of the following questions:

Looking back over the last few months, what are you most proud of?

What do you think are your biggest areas of opportunity to grow? What are you hoping to achieve in the next quarter both in your role and as a team member?

Examples of some good answers to the first question:

ML Tagging & the docker pattern for this service: It forced me to learn the infrastructure more and productionalized a tool on a new stack (python), with tests, with a new AWS setup, and more cross-pollination with analytics. Buttoning it up to a point where analytics can build off it, beefing it up with tests, and making it “learn” from new data over time was very very fun.

Or:

We created an environment that was supportive, challenging, and fun, and carved out projects that interns were genuinely interested & excited in — and we will have a very high offer:intern ratio! I applied our learnings from last summer’s intern program and took on a diffuse set of responsibilities that included the coordination of onboarding efforts, guiding interns on project selection & scoping, reviewing PRs, organizing performance reviews, organizing meetings / office hours, and scheduling events (with lots of help from Laura and awesome intern mentors).

Examples of some good answers to the second question:

I’d like to improve code velocity on larger projects. I haven’t taken enough time to write out a plan and get feedback so that I can build as quickly and confidently on larger projects compared to smaller ones.

Or:

I have a hard time knowing when to rewrite vs refactor vs add to the mess. Sometimes the right thing to do is patch the old code, sometimes the big rewrite is worth it. I find it harder to justify the time for the larger refactors, so end up somewhat frustratedly working with the existing architecture.

Or:

Outside of planning and communicating — another big opportunity for me to grow here is to invest more time in doing external research on how companies manage projects or deploy infrastructure — I think that there’s a lot to learn from others on these fronts.

Peer Reviews

I use the term ‘peer’ loosely here. We basically use one form for all feedback from any other team member, so we may collect reviews not only from other engineers, but also from people you are mentoring or people in other functions outside of engineering that you collaborate with.

Some key questions when considering how you want to use peer feedback are whether or not you want to show it directly to people and whether or not you want to show attribution of the feedback.

We choose not to show attribution of specific feedback to reviewees, because we think some feedback people only feel comfortable sharing when there is no attribution, and often, this is some of the most important feedback for reviewees to see.

Although we do not show attribution of feedback to reviewees, we do show attribution of feedback to the managers compiling reviews. This seems like a middle ground that leads to the most feedback being shared and provides the most information to managers who might want to dig deeper on specific issues with authors of some feedback.

Peer reviews consist of the following questions:

When was this person at their best this quarter? What are some shining moments as a product/software engineer? As a teammate? Give concrete examples.

What are some ways this person could improve as a product/software engineer? As a teammate? Again, give concrete examples.

NB: software engineers are not only responsible for shipping code, but also for helping build a great engineering organization, and we explicitly ask everyone to consider this when writing reviews.

Before we send out the peer review forms, we also ask each person this question:

Are there any areas of responsibility outside the scope of day-to-day engineering (eg, on-call, code review, CI, site reliability, security, recruiting, etc) that you are shepherding and want feedback on?

Then, before you write a peer review for a teammate, you look up in a directory whether there’s anything else they are responsible for that you should give feedback on.

Examples of good peer feedback look like:

I appreciated Rob’s commitment to refactoring the phone call code. It has been a notoriously thorny and difficult feature with a lot of agent pain attached, and he pushed for setting aside time to clean it up when nobody else was pushing for this as a priority.

Or:

As the primary iOS developer, it might be good to think about roping some more people into iOS work. Rob is still a knowledge silo for big parts of the iOS app, which means that more of the feature and bug work on iOS falls on him.

#protip when sourcing specific examples for peer reviews you are writing, it can be helpful to mine data exhaust from collaboration tools to jog your memory. Places to consider include:

- Github Pull Requests

- Code Reviews

- RFCs

- Engineering Blog Posts

- Feature Release Emails

- Post Mortems

- Eng Retro Notes

- KanBan Boards (we use Airtable)

- Slack History

Manager Review / Compilation

Here is the part where I ultimately spend most of my time, and where I think our specific process for reviews adds a lot of leverage.

When compiling formal reviews for each report, I use the following template:

2017-H2 Review

NAME,

I’ll start with some of the feedback from the team.

Praise

GROUPED_QUOTES

Ideas for Improvement

GROUPED_QUOTES

Looking through the feedback from the team and your self review, I’ll offer some commentary.

COMMENTARY_ON_KEY_THEMES_AND_STRATEGIES_FOR_FUTURE_GROWTH

- Kortina

Review Summary

Meets Some / Meets Most / Meets / Exceeds / Greatly Exceeds / Redefines

Grant: __ Shares (standard vesting schedule)

Comp Increase: $__ / yr

The goal of this template is to focus my time on where I can specifically be the most valuable to the person being reviewed, which is to help them synthesize the feedback, understand from the team and company perspective how they have been performing, and prioritize the most important opportunities for personal growth.

When writing someone’s review, I first paste this template into a new Google Doc, and then at the very top, I paste in all of the praise and critical feedback from peers, as well as all of the self review, from their respective Google Forms summary spreadsheets.

Grouping and Summarizing Direct Quotes from Peers

The first key leverage point is to directly pull quotes from peers and summarize them thematically. This looks like the following:

You’re willing to take on important projects that need love / have historically been neglected:

I appreciated Rob’s commitment to refactoring the phone call code. It has been a notoriously thorny and difficult feature with a lot of agent pain attached, and he pushed for setting aside time to clean it up when nobody else was pushing for this as a priority.

Rob is willing to take on and execute on impactful projects like phone calls, request entries, checklists

#protip formatting is important here, to distinguish quotes from peers from your commentary as a manager.

I try to scrub / clean up some of the stylistic peculiarities that might unnecessarily reveal the author, but I will also include specific details that may in some cases reveal some information about the author, eg, “When Rob and I worked on project X, ….” narrows the scope of potential authors. But, given that people choose their reviewers, there is some amount of pseudo anonymity anyway, and I strive to be judicious about which quotes of this kind I include. Often, there are concrete examples important for the reviewee to hear, where any attempt at transcribing / anonymizing would not dramatically change the amount of information revealed, and I typically find including the details worth the trade.
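Choosing the themes is manual judgment, but the mechanical part of this step (collecting quotes under each theme and formatting them so they stand apart from manager commentary) could be sketched roughly as below. The function names and the `> ` quote prefix are assumptions made for illustration, not tooling described in this post.

```python
from collections import defaultdict


def group_quotes(tagged_quotes):
    """Group (theme, quote) pairs into {theme: [quotes]}, keeping first-seen theme order."""
    grouped = defaultdict(list)
    for theme, quote in tagged_quotes:
        grouped[theme].append(quote)
    return dict(grouped)


def render_section(grouped):
    """Render each theme as a heading line followed by its quotes, visually marked as quotes."""
    blocks = []
    for theme, quotes in grouped.items():
        lines = [theme + ":"] + ["> " + quote for quote in quotes]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)


# Themes here are assigned by hand by the manager while reading the feedback.
praise = group_quotes([
    ("You take on neglected projects", "I appreciated Rob's commitment to refactoring the phone call code."),
    ("You take on neglected projects", "Rob is willing to take on and execute on impactful projects."),
])
print(render_section(praise))
```

The rendered text can then be pasted into the Praise or Ideas for Improvement slot of the template.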

Commentary on Key Themes and Strategies for Future Growth

After compiling all of the Praise and Ideas for Improvement from peers, I have a thorough understanding of what the team thinks. I then walk through these along with the self review, and address key points, explaining whether or not my opinion matches the team’s or the reviewee’s on a specific issue, and then offer some suggestions for improving on that point in the future.

This might look something like:

I don’t think you need to be responsible for fixing every iOS bug, but given that historically you have been the point person for iOS, I don’t think anyone else feels empowered to update the process here. At the very least, I’d set up a better / more transparent system for tracking iOS bugs and time to resolution, but even better, float the idea by a few others on the team of having them help out with fixing the iOS bugs and doing some of the cards.

After offering this detailed play by play of the peer and self reviews, I add some higher level thoughts of my own about how each person can grow or take on more responsibility.

For example:

Second, a place you could start taking on more responsibility / ownership is getting more involved in and vocal about staffing infrastructure projects. There has been some concern from the team that we are spending too much time on dev tooling projects (and some concern from others that we are not investing enough in dev tooling or fixes to key systems that would enable everyone to move faster). From my perspective, it feels like we have had a nice mix of work in flight, but in many ways I don’t feel as close to the technical needs as you are, so it would be great to have another judicious opinion from you about when we are spending too much or too little time on infra work.

Review Summary and Compensation

Finally, I’ll fill in the review summary.

We use the following rating scale:

- Meets Some: Serious gap between performance and expectations. Almost certainly entails a performance improvement plan.

- Meets Most: Not performing to the level of expectations. Problematic and requires concrete improvement.

- Meets: Great work. We have high expectations so this is a solid rating.

- Exceeds: Great work that exceeds all expectations, with respect to things like depth or breadth or sheer volume of contribution.

- Greatly Exceeds: Exceeds all expectations, to a surprising extent, eg, has accomplished a shocking amount of work relative to the rest of the team given their level.

- Redefines: Performed industry changing work: for a senior engineer, this might be pushing an open source project that Facebook ditches React for.

It’s important to note that the rating is based on expectations for the individual, so it’s a moving target: the better you are, the harder it is to get a higher score.

In addition to the rating, once per year we do comp adjustments. (Sidebar: it’s really liberating for both reports and managers to do comp adjustments only once per year, rather than constantly diving into comp negotiations ad hoc. It also results, I believe, in more fair treatment across employees, rather than simply benefiting the more vocal, as you can imagine might happen otherwise).

There are 2 components to comp adjustments, equity and base cash compensation increases.

We do equity ‘refreshers’ each cycle, granting more equity to employees each year they work at the company. This equity is subject to our standard vesting schedule, and the way we roughly think about it is “were we to hire this person today, what would their initial offer package include as an equity grant?” The other thing we consider when sizing equity grants is the amount of progress we have made as a company, the goals we have hit, and the questions we have answered about operations or product market fit. Many other startups might only do equity grants as part of offer packages, and then offer more equity only once the vesting for the initial grant completes (if at all). We think, however, that committing to regular annual equity refreshers results in more fair compensation for all employees in the long run.

While the ratings are based on expectations for the individual given past performance and their individual level of expectations, compensation is more grounded in how an individual contributes value to the team and company goals relative to the rest of the team.

There are two key principles governing our basic philosophy around compensation. (1) Since the team that comes to work every day ultimately determines the success of the company, they should be well compensated and dealt in to the financial upside of the success of the company. (2) If everyone were to see everyone else on the team’s comp, everyone should feel good about the fairness of it.

Delivering Reviews

It usually takes me about a day to compile all of the reviews (I can do one in 45 minutes or so), then I meet with everyone in person to discuss them.

I use Fin to schedule 45 minute meetings with each report (spread over 1–2 days).

#protip It’s important to sit side by side with someone in 1:1 meetings between reports and managers. Sitting across a large table can create an adversarial atmosphere.

I ask each person to bring their laptop with them to the review meeting, and I don’t send them the review until they walk in. I imagine myself reading a formal review, seeing things I don’t understand or maybe don’t agree with, and then losing sleep over it until the opportunity to discuss it, so I try to just save everyone some of this stress.

Once someone is in the meeting, I then share the Google Doc with them and tell them, “Take your time reading through. I’ll reread again while you read it, and then we can discuss everything once you are through.”

Then, once they finish reading, I ask, “Is there anything you have questions about? Things that are surprising? Things that resonate?” They talk through any of these questions or concerns, and I offer more context about the feedback and try to help them understand and prioritize what I think is the most important set of takeaways for them to grow and succeed.

Sometimes these meetings can take twenty minutes, and sometimes they can take up to an hour. I find forty-five minutes is usually more than enough time to budget, however.

Final Thoughts

I’m generally very wary of any form of process, as it can easily just add overhead or over optimize focus on metrics to the point of forgetting top level goals.

But process done right can also be a tool that automates repetitive details and liberates you to focus mental energy on things which most require your attention.

Given our current team size and stage of company (and I underscore this premise, because process should always be evaluated and reevaluated given its context), I find our review process consistently helps me learn about the needs of individual team members, extract broader themes and team needs from patterns across reviews, and leaves me really excited about the quality of our team and our potential to do great things going forward.

If you run your review process differently or have any other thoughts or reactions based on this document, I’d love to hear from you. I generally learn a lot from talking shop about eng org stuff like this.

Also, email me if our engineering team sounds like one you’d be interested in joining. We’re hiring.

If you’re interested in working with us, check out Jobs at Fin.

– Kortina

Building Hybrid Intelligence Systems - 2017 Fin Annual Letter // 20 Nov 2017

We started Fin with the premise that mixing human and software intelligence could produce a service better than any pure software ‘assistant’ like Siri or any individual human. (Btw, if you’ve never heard of Fin, it’s a service that provides high quality, on-demand assistance.)

Along the way, we’ve discovered that while hybrid intelligence systems can give you the best of both worlds, they can also give you twice the challenges you might have when dealing strictly with humans or with software alone.

We have learned a ton in the past year, and wanted to share some of the key lessons that have given us more confidence than ever that in the near future most work will be performed by human + software hybrid systems like this one.

Sections

- Shared memory tools make teams smarter and better than any individual can be alone.

- Checklists help even the best humans get things right.

- Using personal context to do the ‘right’ thing for each customer is table stakes for doing work as high quality as a human assistant would.

- Leverage data to reduce variance in human systems.

- Computers are better at math than humans.

- Humans are the universal API.

- Closing thoughts: hybrid intelligence systems should outperform pure software and isolated individual humans.

1. Shared memory tools make teams smarter and better than any individual can be alone.

We operate 24 x 7 x 365, and there is no guarantee that every request from a particular user (or even each part of a single request) gets routed to the same human operations agent on our team, so we can’t rely on any knowledge being ‘cached’ in a person’s brain.

And, because Fin is totally open ended (customers can ask for anything from “can you send flowers to my mom?” to “can you investigate how much it would cost to buy this racetrack?”), we cannot possibly train everyone on our operations team on every kind of request a customer might send in — we can’t even enumerate all the kinds of things someone might ask.

Consequently, we have invested deeply in tools for sharing knowledge about each customer, about each specific request as it gets handled by many people throughout its lifecycle, and about each kind of request the first time we perform an instance of it (eg, buying flowers).

There is some upfront cost to maintaining this ‘shared memory’ for our operations team, but this year we have started to realize many of the advantages we hoped it would deliver at scale:

(i) Because there is not a 1:1 mapping between customers and operations agents, your Fin assistant never sleeps, gets sick, or vacations and is available 24 x 7 x 365.

(ii) Likewise, Fin can work on many requests in parallel for you, unlike a single human assistant.

(iii) While an individual human assistant only knows what they know / what they learn, Fin’s shared memory entails that any time one agent on the Fin team learns something new about the world or about a best practice, everyone else on the team instantly ‘learns’ or ‘knows’ the same thing, because the knowledge is encoded in our tools. So, for example, when one agent learns a new phone number for making reservations at a tough to book restaurant, or a more efficient way to book rental cars, or a great venue for a company offsite, or a cheaper place to get a certain product, every other agent and every customer of Fin benefits from this knowledge.

These network effects that result from our shared memory approach make the Fin team collectively more knowledgeable than a single individual member could be on their own.

2. Checklists help even the best humans get things right.

All of the knowledge our operations team indexes about best practices, about customers, their preferences and relationships, and about the world is useless if we cannot find it and apply it at the right time.

Because Fin’s shared memory is constantly changing, we cannot simply train operations agents on everything before they start working. So, we store this information in a wiki-like format with a search index on top of it, where any agent can look up a document with the best practices for any kind of request or find relevant information about a customer on the fly.

This database and search index are not sufficiently reliable on their own, however, because it is easy for someone to miss a key step in a particular workflow when they only read through a how-to document. Or, even if they had thoroughly learned a workflow at some point, their knowledge may have become stale.

Over the past year, we have migrated much of our process knowledge into checklists, which, as The Checklist Manifesto famously describes, help even the most highly skilled professionals like surgeons and pilots dramatically reduce error rates. Our checklists ensure that operations agents do not miss any key steps in known workflows as they handle requests.

But, while surgeons and pilots know ahead of time a few specific checklists they need to use, we have hundreds of checklists comprised of thousands of steps because of the breadth of work Fin does. This means that finding the right checklist to use (if one exists) is another problem we need to solve.

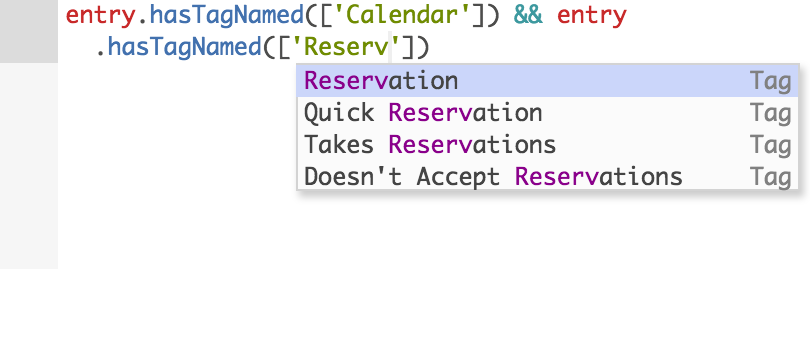

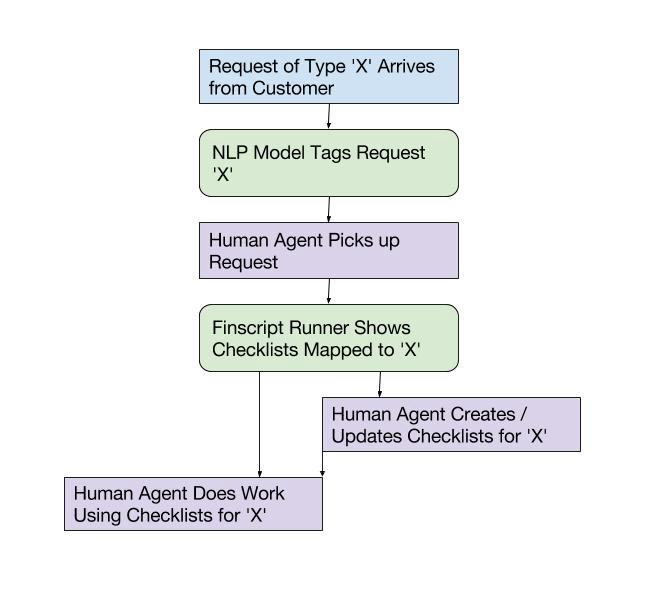

In addition to curating the content of checklists, our operations team is also responsible for managing the rules around what checklists to use when. They author these rules in a variant of javascript we call Finscript.

We have some NLP models that tag each request, and because our operations team has mapped each tag to the relevant checklists with Finscript rules, by the time a human agent picks up a request, the relevant checklists are already in front of them, so they don’t have to search for the correct checklist to use (or even be aware that it exists).
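To make the tag-to-checklist mapping concrete, here is a minimal sketch in Python. Finscript itself is a JavaScript variant and its syntax is not shown in this post, so everything below (the `Rule` type, the tag names, the checklist titles) is a hypothetical stand-in for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, Set


@dataclass(frozen=True)
class Rule:
    """One authored rule: attach `checklist` when `applies(tags)` is true."""
    checklist: str
    applies: Callable[[Set[str]], bool]


# Hypothetical rules an operations team might maintain.
RULES = [
    Rule("Book a restaurant reservation", lambda tags: "restaurant" in tags),
    Rule("Schedule a meeting", lambda tags: "scheduling" in tags),
    Rule("Place a phone call", lambda tags: bool(tags & {"restaurant", "phone"})),
]


def checklists_for(tags):
    """Return the checklists to show an agent, given the tags a model put on a request."""
    tag_set = set(tags)
    return [rule.checklist for rule in RULES if rule.applies(tag_set)]


# Tags would come from the NLP tagging models; here they are supplied by hand.
print(checklists_for(["restaurant"]))
```

Keeping rules as data that the operations team edits, rather than logic buried in application code, is what lets the people closest to the work change which checklist appears when.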

3. Using personal context to do the ‘right’ thing for each customer is table stakes for doing work as high quality as a human assistant would.

Probably the most critical type of knowledge stored in Fin’s shared memory is user context.

When you work with a great person for many years, this person gets to know all of your nuanced preferences — your communication style, price sensitivity, prioritization framework, when to take initiative vs. confirm something, the important people and places in your life, etc.

Acquiring this sort of deep knowledge of each customer is table stakes if Fin is going to be as good as (or better than) a human assistant. (As an aside, the atomic treatment of every request without any sort of memory / context is one of the most frustrating things about talking to many pure software voice assistants currently in market — you can’t yet say, “Alexa, can you send Rob a link to that bucatini I ordered last week, and get me a few more bags?” Instead, you need to fully specify the parameters of every request each time you interact with Siri or Alexa.)

Over the years, Fin has gotten to know many dozens of nuanced preferences about me, like:

- Default duration for work calls: 25 minutes.

- Default spot for morning coffee meetings: Jackson Cafe.

- Do not make reservations at seafood restaurants — Kortina does not eat fish.

- When booking Barry’s Bootcamp, book Treadmill first.

- Use Amex –6000 for all purchases.

- etc, etc, etc…

Likewise, Fin has learned tons of other important context about me that you wouldn’t necessarily call ‘preferences’.

It is by storing all of this knowledge in Fin’s shared memory that any agent who picks up a request from me can know that when I say ‘Rob’ I mean ‘Rob Cheung’ or when I say ‘nopa’ I mean the restaurant and not the neighborhood. (Relevant user context and preferences are, btw, surfaced automatically with Finscript in the same way that relevant checklists are, so an agent does not need to know to look for them.)

All of this context is just as critical to Fin doing the right thing when I ask for something as the preferences I explicitly enumerate to Fin as such.
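As a rough illustration of surfacing context automatically, the sketch below resolves shorthand names in a request and pulls the preferences that match its tags. The record layout, field names, and `surface_context` function are assumptions for the example; the post does not describe how Fin's shared memory is actually stored.

```python
import re

# Hypothetical shared-memory record for one customer; field names are illustrative.
USER_CONTEXT = {
    "kortina": {
        "aliases": {"rob": "Rob Cheung", "nopa": "Nopa (the restaurant)"},
        "preferences": {
            "scheduling": ["Default duration for work calls: 25 minutes."],
            "restaurant": ["Do not make reservations at seafood restaurants."],
        },
    }
}


def surface_context(user, request_text, tags):
    """Return known entities mentioned in the request plus preferences for its tags."""
    record = USER_CONTEXT[user]
    words = re.findall(r"[a-z0-9']+", request_text.lower())
    entities = {w: record["aliases"][w] for w in words if w in record["aliases"]}
    preferences = [p for tag in tags for p in record["preferences"].get(tag, [])]
    return {"entities": entities, "preferences": preferences}


print(surface_context("kortina", "Dinner with Rob at nopa on Friday?", ["restaurant"]))
```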

4. Leverage data to reduce variance in human systems.

We have an internal principle: ‘AI’ at Fin means Always Improving. We try to design systems with reinforcing feedback loops that would self improve given no new energy injected from external sources (eg, product development, tools, talent, money).

Adhering to this principle proves difficult given the vast breadth of heterogeneous work we do.

We start to make the problem tractable by measuring absolutely everything that happens in the Fin ecosystem, but even with the many terabytes of data we’ve captured in our Redshift cluster, it has at times been difficult to answer questions like:

- How does our quality of recommendations today compare to 4 weeks ago?

- How does our speed on scheduling requests compare to last week’s?

- Did we spend longer on this request than we should have?

The difficulty in quantifying answers to these questions is due to request variance:

- Category of request (eg, research vs. scheduling)

- Complexity of request within the category

- How clearly the request was specified by the customer

- How many round trips the request took to complete

and also due to a vast array of other dimensions and factors like:

- How many new operations agents were onboarded in the past few weeks? What percentage of the entire population did they comprise?

- Was this work performed at the end of someone’s shift or the beginning?

- Was this work performed on a redeye or a daytime shift?

- Was the person performing this work having a bad day?

- Were there any known technical glitches or service latency on a given day?

- Was there some external latency (eg, an outage from an external service provider or long hold times for a new product release or restaurant opening)?

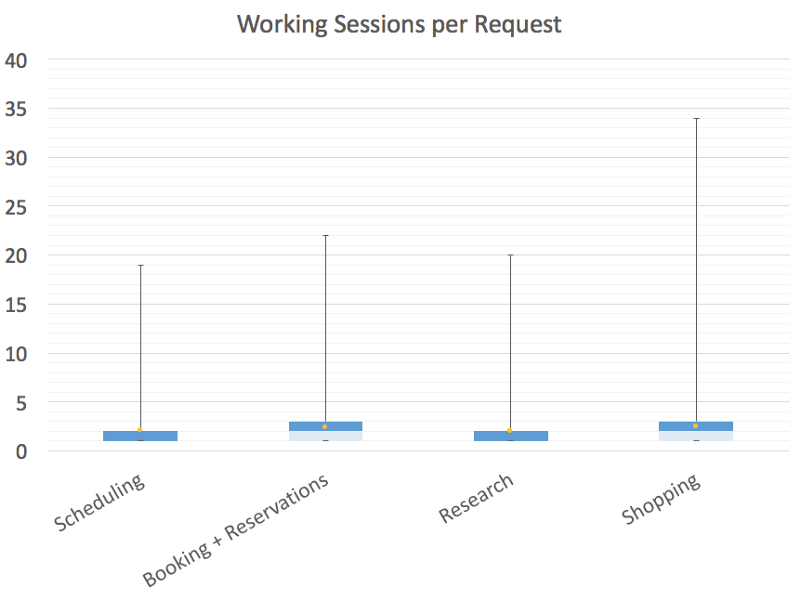

People ask us all the time (i) why we can’t charge a flat rate for requests of a given type or (ii) what the average charge is for a request of a given category.

We would love to charge a flat rate per request (and in the past we experimented with charging a flat monthly rate). The problem with this approach is that the high outliers, either in terms of request complexity or heavy usage users, pull the averages up sharply, so the effective rates for the most frequent requests or typical users would be more expensive under a flat rate model.

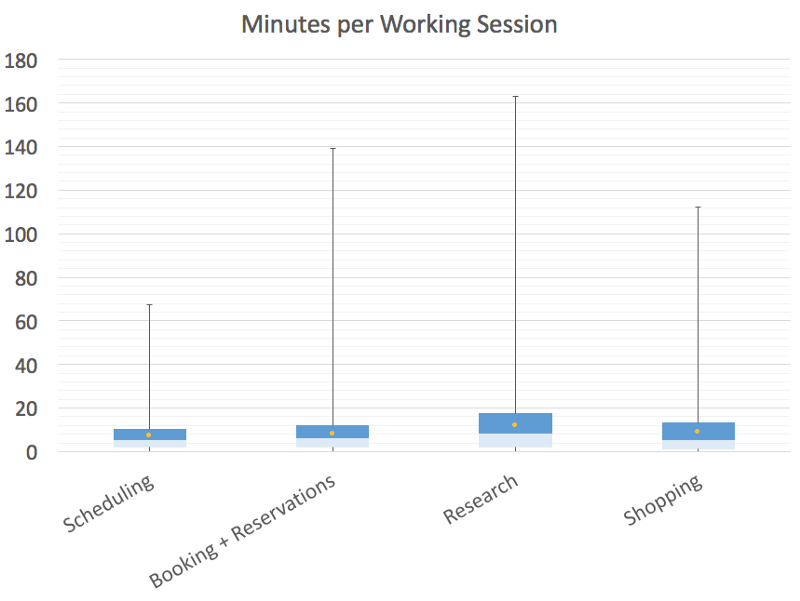

You can see this by looking at some of the complexity distributions for work we do, both in terms of number of round trips or working sessions to complete a request, or in terms of minutes spent per working session, across a few common categories. In all of these cases, the average falls way above the median.
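A tiny worked example shows why a long right tail makes flat-rate pricing unattractive for typical requests. The numbers below are made up for illustration and are not Fin data.

```python
from statistics import mean, median

# Hypothetical minutes spent on ten requests in one category (illustrative only).
minutes = [4, 5, 5, 6, 6, 7, 8, 9, 45, 105]

avg = mean(minutes)    # pulled up by the two outliers
mid = median(minutes)  # what a typical request looks like

# How many requests would cost more under a flat rate set at the average?
overcharged = sum(m < avg for m in minutes)

print(f"mean={avg} median={mid} requests cheaper than the mean: {overcharged}/{len(minutes)}")
```

With these numbers the mean is 20 minutes and the median is 6.5, so a flat rate set at the average would raise the price of eight out of ten requests.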

What’s not great about this from a customer perspective is that it makes the price for any given request unpredictable: historically, your price would vary based not only on the complexity of work, but also all of the external and environmental factors I listed above, like who specifically worked on your request and what kind of day they were having.

One of the areas we invested deeply over the past year was making pricing more predictable by smoothing out variance due to all these environmental factors. By studying thousands of requests of different types and complexity, we recently were able to update our pricing to essentially charge for the average time we have observed that a request should take, removing environmental factors like who did it and when.

This has resulted in much smoother, more predictable pricing, which now is mainly a function of the complexity of work requested.
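One simple way to picture this kind of pricing is a lookup table of average observed minutes per kind of request, so the charge depends on what was asked rather than on who did the work or when. This is a sketch under assumptions: the bucket keys, the sample history, and the per-minute rate are hypothetical, and the actual model is described only at a high level in this letter.

```python
from collections import defaultdict
from statistics import mean


def expected_minutes(history):
    """Average observed minutes per (category, complexity) bucket.

    `history` is an iterable of (category, complexity, actual_minutes) tuples.
    """
    buckets = defaultdict(list)
    for category, complexity, minutes in history:
        buckets[(category, complexity)].append(minutes)
    return {key: mean(values) for key, values in buckets.items()}


def price(category, complexity, table, rate_per_minute):
    """Charge for the expected time of this kind of request, not the time actually spent."""
    return table[(category, complexity)] * rate_per_minute


# Hypothetical history; the spread within a bucket stands in for environmental noise.
history = [
    ("scheduling", "simple", 8),
    ("scheduling", "simple", 12),
    ("scheduling", "simple", 10),
    ("scheduling", "complex", 30),
    ("scheduling", "complex", 50),
]
table = expected_minutes(history)
print(price("scheduling", "simple", table, rate_per_minute=1.0))
```

Two customers sending the same simple scheduling request would pay the same amount here, even if one request happened to take 8 minutes and the other 12.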

5. Computers are better at math than humans.

This one is a bit tongue in cheek, but worth mentioning since we frequently talk to people outside the company who think that Fin operates entirely on some extremely sophisticated NLP / deep learning / black box software that can perform far more complex tasks than Siri.

In reality, while we have a few language models running that automatically categorize requests and analyze customer sentiment, the models that are the most fundamentally valuable to our business are more numerical.

One example is the model I mentioned in (4), which looks at all the work that happened on a request and determines the complexity in terms of how many minutes the work should have taken to complete. Before we had this model, we had a purely manual quality review process, part of which asked the question, “how long should this request have taken to complete?” Having reviewed hundreds of request transcripts myself, I can personally attest that our model is far more accurate (and far faster) at answering this question than I am.

Another place we lean heavily on software is scheduling. We have one set of models that predicts how much work we expect customers to demand for each hour of each day of the next 4 weeks. Then, we have another model that takes this as input, along with other parameters describing a pool of operations agents available for work, each with unique constraints and preferences. This second model generates the optimal schedule given all of these inputs, and does a far better job at it than when one of our operations leads tried to do this with an Excel spreadsheet back in the early days.
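To show the shape of the second step (forecasted demand in, shift assignments out), here is a deliberately simple greedy heuristic. It is not the optimization model described above and does not guarantee an optimal schedule; the input format and the `build_schedule` name are assumptions for illustration.

```python
def build_schedule(demand, agents):
    """Greedily assign agents to hours.

    demand: {hour: number_of_agents_needed}
    agents: {name: {"available": set_of_hours, "max_hours": int}}
    Returns {hour: [agent names]}; an hour may end up understaffed.
    """
    schedule = {hour: [] for hour in demand}
    hours_left = {name: info["max_hours"] for name, info in agents.items()}

    def candidates(hour):
        return [name for name, info in agents.items() if hour in info["available"]]

    # Staff the hardest-to-cover hours first so scarce availability is not used up elsewhere.
    for hour in sorted(demand, key=lambda h: len(candidates(h))):
        # Prefer agents with the most remaining hours to spread the load.
        for name in sorted(candidates(hour), key=lambda n: -hours_left[n]):
            if len(schedule[hour]) >= demand[hour]:
                break
            if hours_left[name] > 0:
                schedule[hour].append(name)
                hours_left[name] -= 1
    return schedule


# Hypothetical forecast and agent pool.
demand = {9: 1, 10: 2, 11: 1}
agents = {
    "ana": {"available": {9, 10}, "max_hours": 2},
    "ben": {"available": {10, 11}, "max_hours": 2},
}
schedule = build_schedule(demand, agents)
print(schedule)
```

A production version would more likely be posed as a constrained optimization problem, with agent preferences as soft constraints.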

6. Humans are the universal API.

This one is worth mentioning, because it’s the main reason Fin is able to handle such a wide breadth of tasks.

While we would certainly love to automate things like purchasing things or making restaurant reservations, there are no programmatic APIs that we could use to do most of the work that our customers ask for. Not even all of the restaurants we book are on OpenTable.

One of the things that makes Fin as capable as a human assistant is that we can use the public internet, we can email or text anyone, or we can pick up the phone and call people to get things done for you.

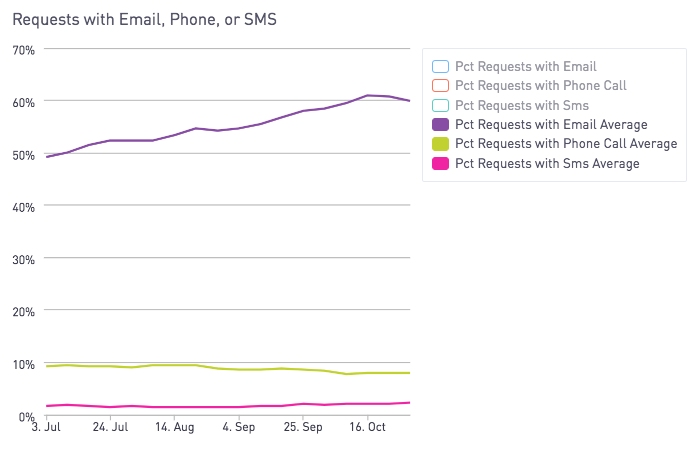

About 60% of requests involve emailing someone, 10% involve a phone call, 2% involve sending an SMS, and nearly every request involves using some internet service outside Fin.

This fact alone entails that there are a huge number of things Fin can do that a pure software assistant won’t be able to do for a very long time, if ever.

7. Closing thoughts: hybrid intelligence systems should outperform pure software and isolated individual humans.

We believe that hybrid systems that leverage great software and a network of humans with shared memory are the future of work. These hybrid systems should outperform what either pure software or isolated individual humans are capable of, on a number of vectors, like cost, efficiency, speed, availability, etc. Just as much computation has moved from self-managed hardware to networked clouds, we believe many other types of work today performed by individuals will migrate to hybrid systems like Fin in the coming years.

Looking back over the past year has been a fun exercise for us, and hopefully gives you a bit more of an understanding of what you can expect from Fin if you’re already a customer (or inspires you to give it a shot if you have not already).

If you’re interested in trying out Fin as a customer, sign up here.

If you’re interested in joining our amazing operations team, apply here.

If you’re interested in joining our engineering team, apply here.

If you’d like to come meet our team and talk about hybrid intelligence systems at our holiday party, rsvp here.

– Kortina

Intelligent Work Routing, Dedicated Account Managers, and New Feedback Channels // 13 Feb 2018

Today, we are excited to announce a number of updates designed to level up the overall quality of your Fin experience.

Boosting Speed and Quality with Intelligent Work Routing

To ensure that every Fin request is completed on time, our systems must (i) predict customer demand for any given hour of the day and day of the week, (ii) ensure we have enough people staffed to meet demand at any given time, and (iii) rank which request should be picked up next relative to other requests of varying priority. Historically, by far the most important factor in deciding which piece of work gets done next is the urgency of a request — calling a restaurant to make a dinner reservation for tonight must be done before booking a flight that’s six months out, for example.

Now, however, we have enough volume coming through our systems that whenever someone on our team becomes available to work on something new, there are a number of requests of equal priority ready to be picked up. This gives us the opportunity to start doing cool things to match each piece of work with the best person to handle it. We can match a person with work based on their skills or their familiarity with a certain domain or location or customer. We’ve seen up to 10–20% improvements in the speed and quality of work done based on this intelligent routing, which translates into better service for customers.
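The two-stage idea (urgency decides first, fit breaks ties) could be sketched like this. The field names and the scoring function are hypothetical; the post does not specify how skills or familiarity are weighted.

```python
def next_request(agent, requests):
    """Pick the next request for an agent who just became available.

    Urgency always wins: only requests tied at the most urgent priority are considered.
    Among those, prefer the request that best fits the agent's skills and familiarity.
    Lower `priority` numbers mean more urgent.
    """
    most_urgent = min(r["priority"] for r in requests)
    tied = [r for r in requests if r["priority"] == most_urgent]

    def fit(request):
        # One point for a matching skill, one for a familiar customer (illustrative weights).
        return (request["category"] in agent["skills"]) + (
            request["customer"] in agent["familiar_customers"]
        )

    return max(tied, key=fit)


# Hypothetical agent and queue.
agent = {"skills": {"travel"}, "familiar_customers": {"c2"}}
queue = [
    {"id": "r1", "priority": 1, "category": "scheduling", "customer": "c1"},
    {"id": "r2", "priority": 1, "category": "travel", "customer": "c2"},
    {"id": "r3", "priority": 2, "category": "travel", "customer": "c2"},
]
print(next_request(agent, queue)["id"])
```

Routing only among equally urgent requests means the matching never delays time-sensitive work.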

Dedicated Account Managers for Every User

One of the benefits of using Fin vs hiring a single human assistant is leveraging the benefits of our entire operations team — they are available to help you 24 x 7 x 365, work on many requests in parallel, and learn new skills and things about the world faster than any one person can.

Starting today, we are also giving each Fin user access to a dedicated Account Manager. You can go to them for help and support, questions, or feedback on any request that you weren’t 100% satisfied with.

Expect an email introduction from your Account Manager today.

New Feedback Channels

For quite some time, you have been able to submit feedback at a per request level through our iOS app, but there has not been a great way to give feedback on email requests (which comprise a large percentage of our work).

Starting this week, each time we complete a request, we’ll email you a link to a feedback form you can use to rate the work done with 1–5 stars. We’ll be turning this feature on gradually for groups of users over the next few weeks.

We anticipate all of these updates will lead to an overall higher quality service for every customer. If you have any feedback on these updates or anything else, let us know! (And if these sound like interesting problems to work on, we’re hiring).

– Kortina

Improving Operations Quality through Measurement and Performance Management // 15 Apr 2018

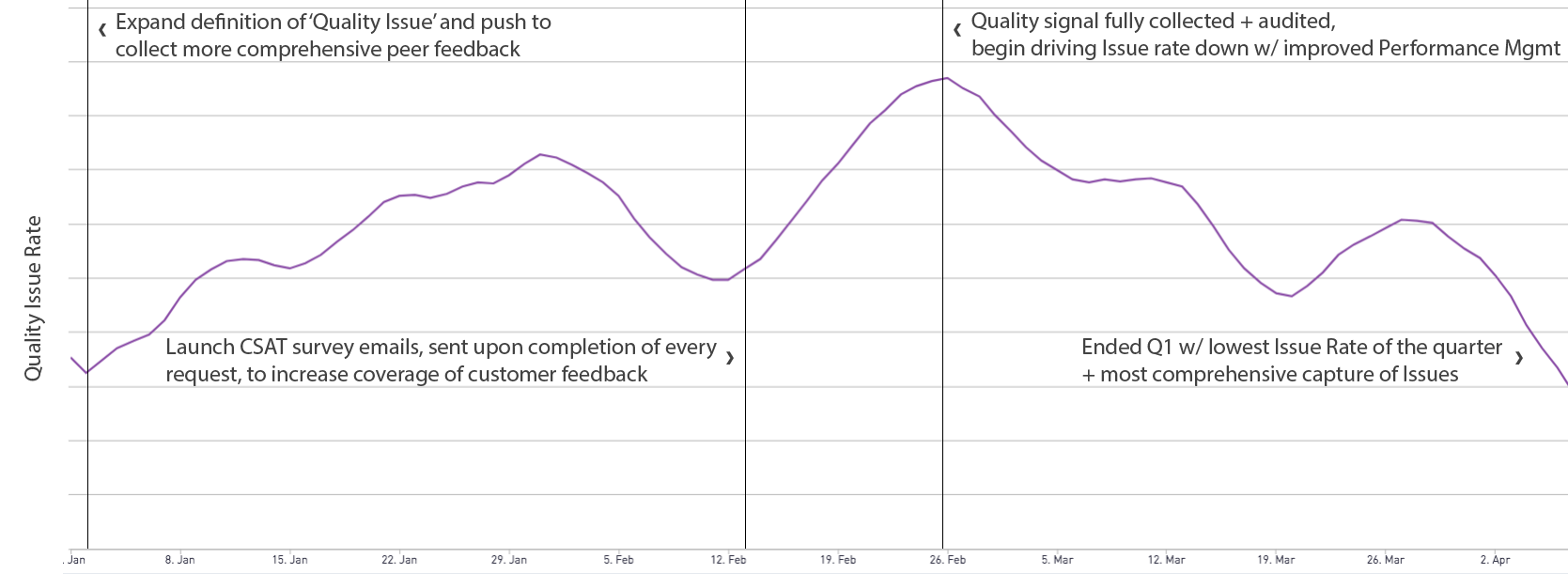

Earlier this year, I wrote about some changes we were making at Fin to level up the quality of our service. This included providing a Dedicated Account Manager for every customer, as well as a bunch of updates to our internal metrics and processes. In this post, I want to talk a bit more about improvements to service quality that resulted from two of these changes: (i) more comprehensive and accurate measurement of quality signals and (ii) better ‘windowing’ of metrics to accelerate performance management.

How do you accurately measure service quality?

A few common methods for measuring quality of service for a team include CSAT (customer satisfaction score) and NPS (Net Promoter Score). CSAT can be useful for gauging relative quality (either comparing the average performance of different individuals on a team, or comparing your company to another in a similar category). NPS is commonly used to predict customer loyalty and to track trends in the quality of a service or product over time.

Because both of these methods rely on customer surveys and provide only a sampling of customer sentiment and feedback, neither gives you 100% coverage of poor customer interactions. You must assume that many customers who had a poor experience will not take the time to give you feedback. Furthermore, a customer may sometimes subjectively label an interaction poor when it is objectively not a failure. (NB: This may be the result of mismatched expectations, which is arguably itself a kind of failure if you subscribe to ‘customer is always right’ doctrine, but that is outside the scope of this post.)

Completely automated systems (a sentiment analyzer, e.g.) may give you broader coverage than user-submitted surveys, but they may not be 100% accurate either.

Peer review can potentially provide broader sampling than customer surveys, and it may have a more stringent definition of failure that catches some problems that users would miss. But when peers doing the review have team goals that conflict with providing negative feedback to their teammates, you may run into challenges with incentives.

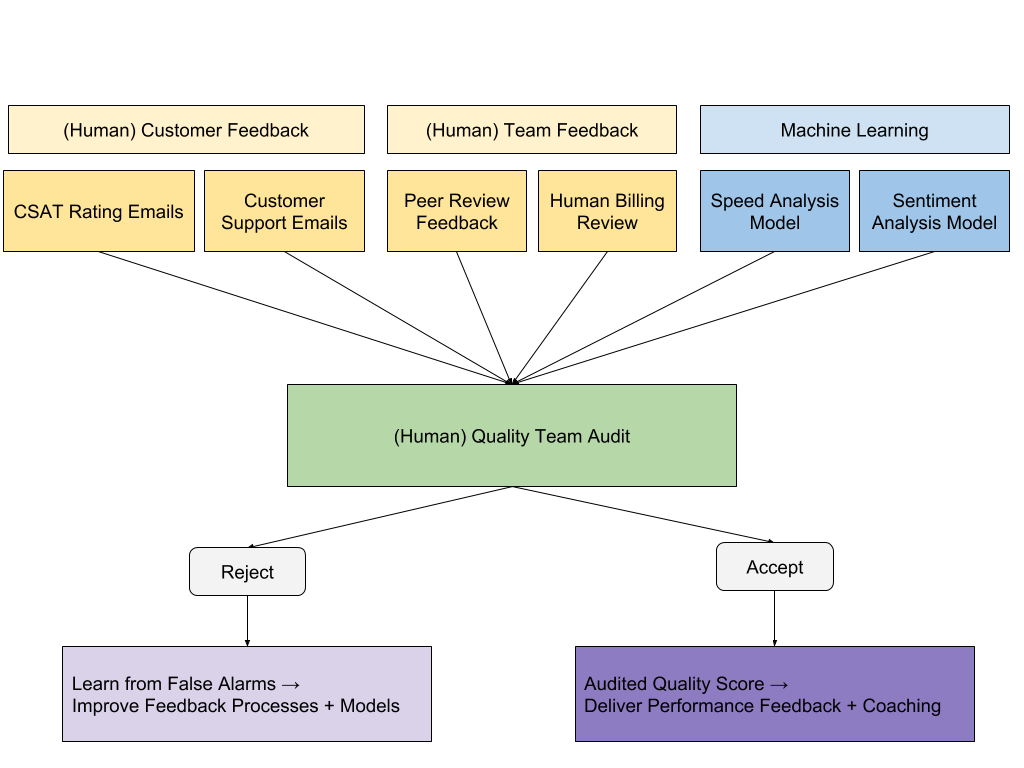

Given that each of these systems for collecting quality signal is imperfect in some way, we have found the best way for scoring quality is to combine and audit all the signals. Here is an overview of how our current quality scoring works:

We want to cast as wide a net as we can, so that we limit the chances of any quality issue escaping the opportunity (1) to be corrected before it negatively impacts a customer and (2) to generate feedback for the person(s) responsible.

At the same time, since we are managing to quality metrics, we don’t want to penalize anyone by decrementing their stats because of a subjective customer response or false alarm of an automated system like our sentiment analysis model. So, we feed all the various quality signals we collect into a (human) audit process to sift the real issues from the false alarms. To mitigate challenges of peer review that I mentioned above, we have created a new dedicated Quality Team that works with Account Managers to perform this audit.
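The "wide net, then audit" flow can be sketched roughly as follows. This is a simplified illustration rather than our production system: the field names, the 3-star cutoff, and the `auditor_confirms` callback (a stand-in for the human audit decision) are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RequestSignals:
    request_id: str
    csat_stars: Optional[int] = None   # 1-5 customer rating; None if no survey came back
    sentiment_alarm: bool = False      # automated sentiment model flagged the thread
    peer_flag: bool = False            # a peer reviewer reported a problem


def needs_audit(s: RequestSignals, low_star_cutoff: int = 3) -> bool:
    """Cast a wide net: any single negative signal sends the request to audit."""
    low_rating = s.csat_stars is not None and s.csat_stars <= low_star_cutoff
    return low_rating or s.sentiment_alarm or s.peer_flag


def quality_issue_rate(signals: List[RequestSignals],
                       auditor_confirms: Callable[[RequestSignals], bool]) -> float:
    """Share of all requests with an issue that a human auditor confirmed."""
    confirmed = [s for s in signals if needs_audit(s) and auditor_confirms(s)]
    return len(confirmed) / len(signals)


signals = [
    RequestSignals("r1", csat_stars=2),
    RequestSignals("r2", sentiment_alarm=True),
    RequestSignals("r3", csat_stars=5),
    RequestSignals("r4", peer_flag=True),
]
# Stand-in for the human audit: r2 turned out to be a false alarm.
audit_decisions = {"r1": True, "r2": False, "r4": True}
rate = quality_issue_rate(signals, lambda s: audit_decisions[s.request_id])
print(rate)  # 0.5
```

Three of the four requests are flagged for audit, but only the two confirmed issues count against anyone's stats.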

Improving Performance Management by Tightening Metric Windows

Once we were confident we were identifying as many quality issues as possible with our new metrics system, we set aggressive goals for our operations team to drive these down to an acceptable rate.

The most impactful change we made to help the team move towards their goals was tightening time windows for key metrics.

As most startups probably know, it’s critical to choose the right time window for each metric: you basically want the smallest time window possible that still gives you sample sizes large enough for the metric to be relatively stable and free of noise. A tighter time window means you can run experiments and get significant results and feedback faster (and ultimately learn and improve faster). So, daily active user counts are better than weekly active counts, which are better than monthly active counts, provided your per user activity frequency and total number of active users support the tighter windows.

The same holds for performance metrics for individuals – tighter windows allow for faster feedback and improvement.

An opportunity we identified early in the quarter was that almost all of our agent performance metrics were pegged to 4 weeks, which meant 4 weeks to identify when someone needs a performance improvement plan, and then 4 weeks to determine the outcome of that plan.

When midway through the quarter we talked about driving results by the end of the quarter, it became clear this feedback cycle was way too long. So for each of our key performance metrics, we asked ‘how tight can we make the window for this metric to collect enough data to accurately measure it?’ For many important quality metrics, that window was now 2 weeks. That meant for a set of metrics, we could identify the need for a PIP based on only 2 weeks of data, and someone could successfully pass within 2 more weeks. This doubled the speed of our performance feedback loop, from 8 weeks to 4 weeks.

It had the additional benefit of empowering each individual on the team to more quickly understand how changes in their workflow, incorporation of coaching, and attention to detail translated into better results, because it is far easier to move a 14 day average metric with a single day of hard work than to move a 28 day average. Seeing the results of their efforts reflected in key metrics more quickly was a big psychological boost to the team.
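A quick back-of-the-envelope check of that last claim, using made-up numbers: a hypothetical agent with a 10% daily issue rate has one flawless day. The sketch treats the windowed metric as a plain mean of daily rates, which assumes roughly equal volume each day.

```python
def trailing_mean(daily_values, window):
    """Mean of the most recent `window` daily values."""
    recent = daily_values[-window:]
    return sum(recent) / len(recent)


# Hypothetical history: a 10% issue rate every day for 28 days...
history = [0.10] * 28
# ...followed by a single day with no issues at all.
after_good_day = history + [0.0]

improvement = {}
for window in (14, 28):
    before = trailing_mean(history, window)
    after = trailing_mean(after_good_day, window)
    improvement[window] = before - after
    print(f"{window}-day window improves by {improvement[window]:.4f}")

# The same day of work moves the 14 day metric about twice as far.
print(improvement[14] / improvement[28])
```

One good day replaces 1/14 of the short window but only 1/28 of the long one, so the visible improvement is roughly double.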

Takeaways

This chart visualizes these effects over the quarter. You can see our Quality Issue Rate spike up early in the quarter, when we launched more comprehensive peer review systems and CSAT email surveys, capturing signal that previously went unmeasured. Then, the Issue Rate steadily marched down as we made improvements to our performance management process:

Given the breadth and complexity of the work Fin does, we’ve found very few one size fits all answers. This holds both for various methods of measuring quality, as well as for time windows for different key metrics. Ultimately, ensuring the success of our customers hinges upon our ability to measure and drive the quality and efficiency of operations, so we are constantly on the lookout for new, more accurate, and more comprehensive opportunities for measurement.

ps. If you work in operations or retail and are interested in performance management at scale, we’d love to hear how you think about these challenges.

– Kortina

Finding the Right Person for the Job: Matching Requests of Different Types to Workers with Different Skills // 12 Jun 2018

Here at Fin, as with any large group, our agent team is a heterogeneous mix of people with different types of skills. As we scale our operations team, we want to ensure that incoming requests are always being routed to the agents most capable of completing them quickly and effectively. What follows is a walkthrough of how we capitalize on agents’ differing skill sets to ensure that agents are always working on the types of tasks that they are fastest at.

Routing Work Based on Expected Time-to-Completion

After an agent completes a task, if there is still work sitting in our queue waiting to be picked up (which there inevitably is), then our router has to decide what piece of work to feed that agent next. The router takes into account a number of different variables when making this decision, such as when the request was first made, or if there are any externally-imposed deadlines on the work, e.g. needing to buy a plane ticket before the flight sells out.

All of these other, more pressing considerations being equal, we would then like to preferentially route tasks to agents who we think will be able to complete that work in the shortest amount of time. For each type of task, we have data on how long it took a given agent to complete that type of task in the recent past, and we would like to use this information to determine whether that agent will be significantly faster (or slower) than their peers at completing that type of task in the future. If we can be reasonably confident that an agent will be faster (slower) at completing a certain type of work than their peers, then we should (shouldn’t) route that piece of work to them.

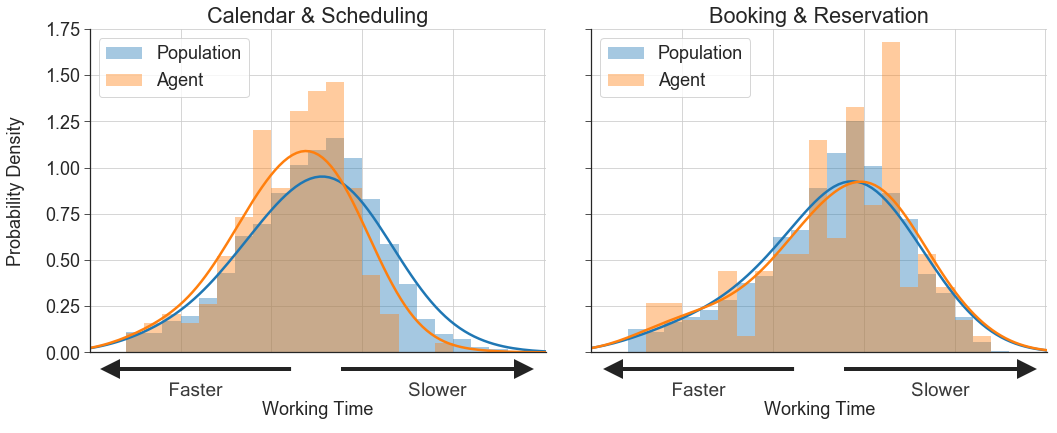

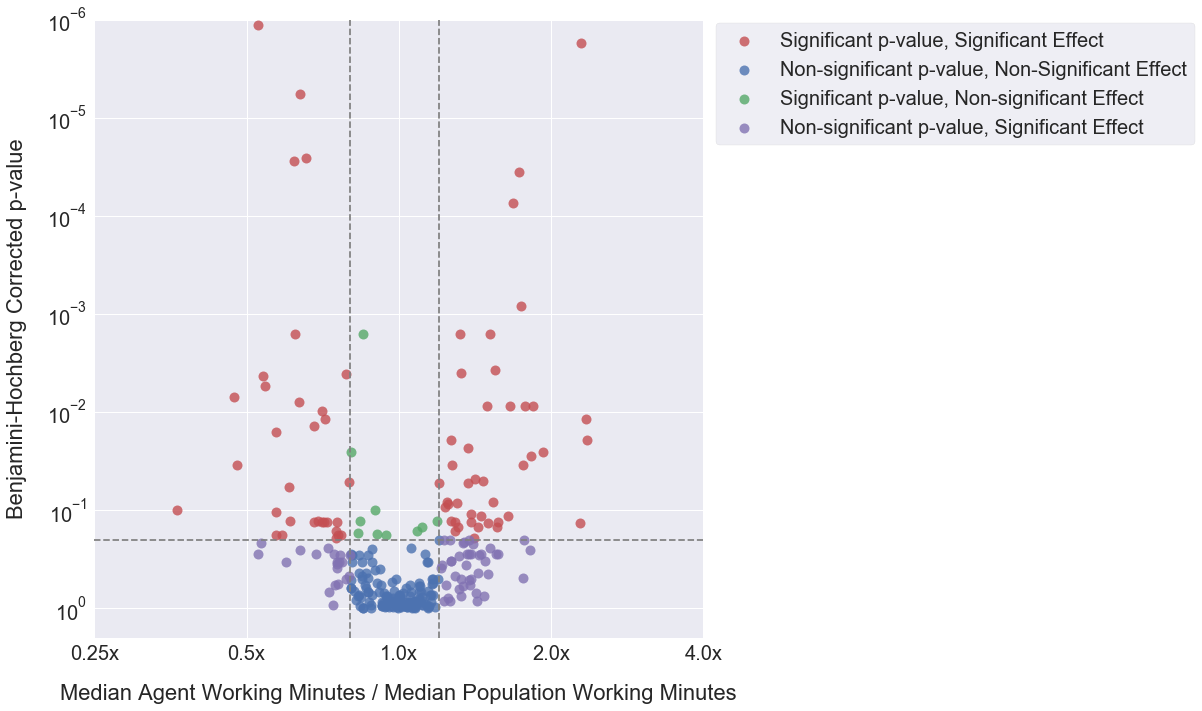

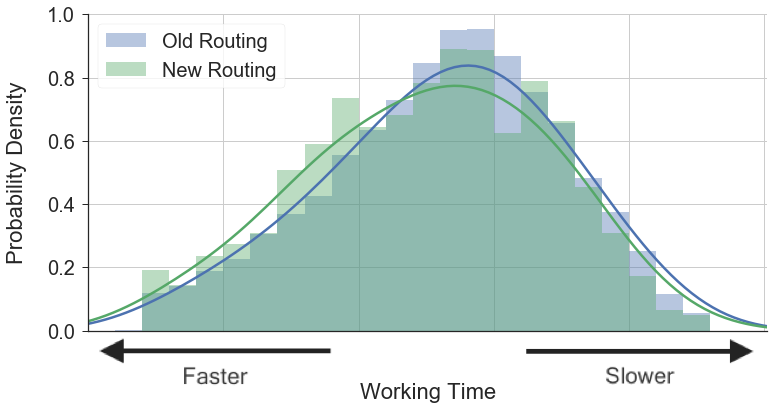

The agent in Figure 1 is significantly faster than the rest of the team at Calendar & Scheduling tasks, but performs at roughly average speed on Booking & Reservation tasks:

Figure 1. Amount of time it takes a particular agent to complete two different task types compared to the population.

Statistical Hypothesis Testing

The question that we’re asking here, namely, which agents differ significantly from the population in terms of how long it takes them to complete a given task type, is highly amenable to traditional hypothesis testing. The hypotheses that we are trying to decide between are:

- H0: A piece of work from category C completed by agent A is not more likely to be completed faster (or slower) than if that same piece of work were completed by another randomly-selected agent from the population

- H1: A piece of work from category C completed by agent A is more likely to be completed faster (or slower) than if that same piece of work were completed by another randomly-selected agent from the population

For a specific agent A and category of work C, we can answer this question using the Wilcoxon Rank-Sum Test (also known as the Mann-Whitney U test, which is the name SciPy uses), which can be called in Python using:

```python
from scipy.stats import mannwhitneyu

statistic, pvalue = mannwhitneyu(agent_durations, population_durations, use_continuity=True, alternative='two-sided')
```

Controlling for Multiple Hypotheses

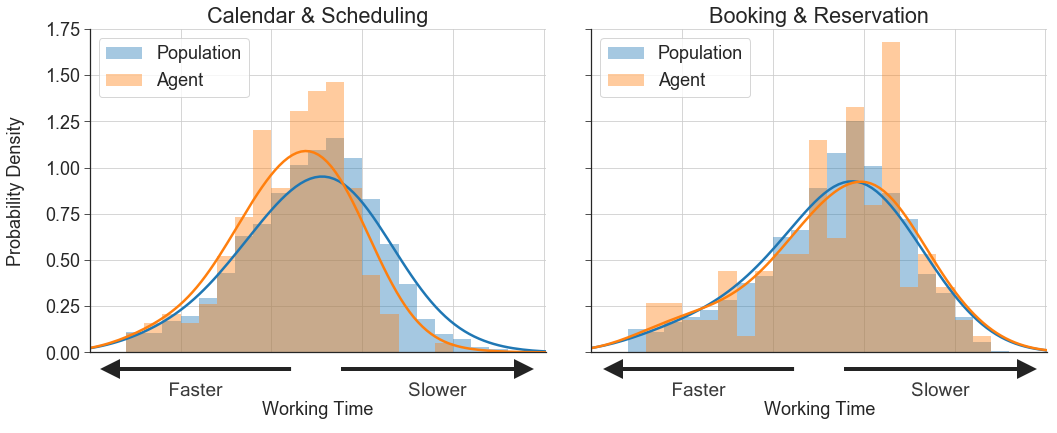

If we simply apply the above test to every agent/category combination, and deem each test significant if its p-value is below the predefined Type 1 error rate cutoff, we will be dramatically inflating our true Type 1 error rate by virtue of having tested hundreds of different hypotheses. The webcomic xkcd illustrates this problem very nicely in the comic below.

Figure 2. xkcd, warning the public about the dangers of multiple hypothesis testing since 2011
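To get a feel for how quickly the error rate inflates, here is a small sketch. It assumes the tests are independent and uses a per-test cutoff of 0.05 purely for illustration (the cutoff and the test counts are not figures from our system). Under those assumptions, the chance of at least one false positive across m tests is 1 - (1 - α)^m.

```python
def familywise_error_rate(alpha, num_tests):
    """Probability of at least one false positive across independent tests,
    when every null hypothesis is actually true."""
    return 1.0 - (1.0 - alpha) ** num_tests


# Illustrative per-test cutoff of 0.05, for 1, 20, and 200 tests.
for m in (1, 20, 200):
    print(m, round(familywise_error_rate(0.05, m), 3))
```

With a single test the error rate is the nominal 5%, with 20 tests it is already about 64%, and with a couple hundred agent/category combinations a false positive somewhere is all but guaranteed.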

Broadly speaking, there are two approaches to correcting for multiple hypothesis tests:

- Control the Family-wise Error Rate (FWER): Limit the probability that any of our tests conclude that there is a significant difference when none exists

- Control the False Discovery Rate (FDR): Limit the proportion of our tests that conclude that there is a significant difference when none exists

In the above xkcd comic, the scientists should have controlled the FWER, as the cost of falsely alarming the public about a nonexistent health hazard is very high. However, in our case, the cost of a false positive is much lower; it just results in us routing work suboptimally.

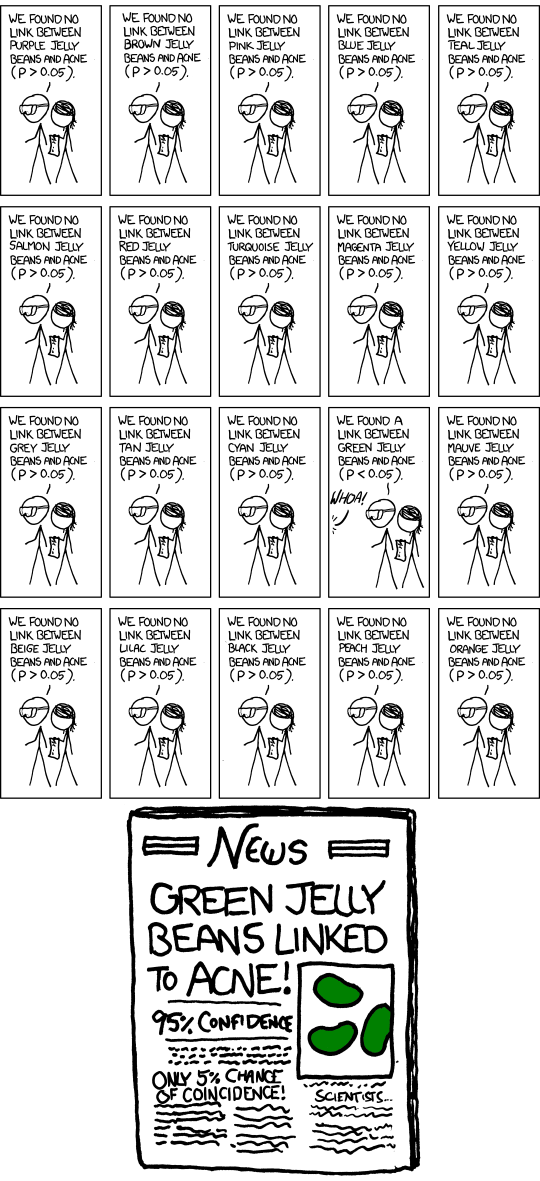

For our purposes it is sufficient to control the FDR such that at most 20% of the null hypotheses we reject are false positives. This can be accomplished by using the Benjamini-Hochberg (BH) procedure, which works as follows:

- For each of the m many hypothesis tests performed, order the resulting p-values from least to greatest as

p1,p2,...,pm - For a given false-positive cutoff α (= 0.20 in our case), and a given ordered p-value index i, check whether pi

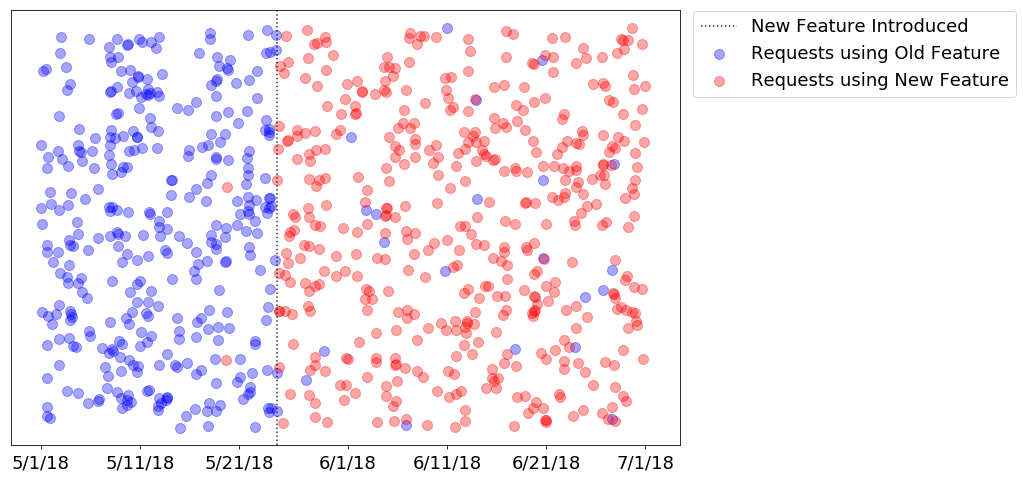

< α \* i / m - Find the largest i such that this inequality holds, and reject all null hypotheses corresponding to the p-values with indices up to and including i
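The three steps above translate fairly directly into code. The following is a minimal pure-Python sketch, not our production implementation: the function name and the example p-values are made up. It uses the strict inequality as written above, whereas the procedure is often stated with ≤.

```python
def benjamini_hochberg(pvalues, alpha=0.20):
    """Return a list of booleans, True where the null hypothesis is rejected."""
    m = len(pvalues)
    # Step 1: indices of the p-values, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda idx: pvalues[idx])
    # Steps 2 and 3: find the largest 1-based rank i with p_i < alpha * i / m.
    largest_rank = 0  # stays 0 if no p-value passes its threshold
    for rank, idx in enumerate(order, start=1):
        if pvalues[idx] < alpha * rank / m:
            largest_rank = rank
    # Reject every hypothesis ranked at or below that index, including any
    # whose own p-value missed its individual threshold.
    rejected = [False] * m
    for idx in order[:largest_rank]:
        rejected[idx] = True
    return rejected


# Made-up p-values for five agent/category tests.
pvalues = [0.01, 0.30, 0.04, 0.15, 0.60]
print(benjamini_hochberg(pvalues))  # [True, False, True, False, False]
```

Here the sorted p-values 0.01 and 0.04 fall below their thresholds of 0.04 and 0.08, while 0.15 misses its threshold of 0.12, so only the first and third tests are declared significant. Note that the search is for the largest passing index, not the first failure: with p-values of 0.11 and 0.15, the smaller one misses its threshold of 0.10, but the larger one passes its threshold of 0.20, so both nulls are rejected.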